VIP Cup 2024 at ICIP 2024

IEEE SPS Video and Image Processing Cup at IEEE ICIP 2024

SS-OCT Image Analysis

IEEE ICIP 2024 Website | 27-30 October 2024 | 2024 VIP Cup Official Document

[Sponsored by the IEEE Signal Processing Society]

Optical Coherence Tomography (OCT) is a non-invasive retinal imaging technique widely used in the diagnosis and treatment of many eye-related diseases. Anomalies such as Age-related Macular Degeneration (AMD), Diabetic Retinopathy (DR) and Diabetic Macular Edema (DME) can be diagnosed from OCT images. Because early and accurate diagnosis of these diseases is so important, high-resolution, clear OCT images are essential. The analysis and processing of OCT images has therefore become an important and practical research area in biomedical image processing.

A range of processing techniques has been applied to OCT images, including super-resolution, de-noising, reconstruction, classification and segmentation. Although many algorithms exist for OCT image analysis, there is still a need to improve the quality of the resulting images and the accuracy of classification. This challenge is therefore dedicated to OCT image enhancement and classification.

Task Description

The challenge contains the following three tasks:

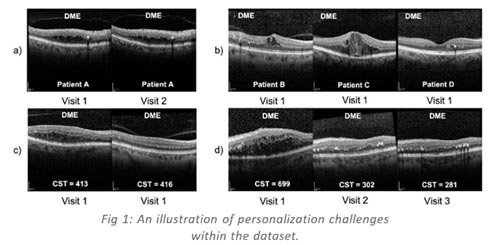

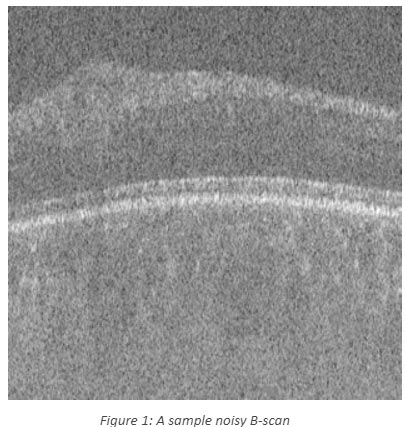

- De-noising of noisy OCT images. Many captured OCT images are noisy, which can substantially reduce the accuracy of diagnosing eye-related diseases, so de-noising is an important step in OCT image analysis. The task is to de-noise the provided noisy OCT B-scans as effectively as possible. A sample noisy B-scan is shown in Figure 1.

- Super-resolution. To prevent motion artifacts, OCT images are usually captured at rates lower than the nominal sampling rate, which results in low-resolution images. Super-resolution methods can reconstruct high-resolution images from the low-resolution ones. The aim of this task is to obtain high-resolution OCT B-scans from low-resolution OCT B-scans.

- Volume-based classification of the OCT dataset into sub-classes. The aim of this task is to classify each observed case (each of which comprises several B-scans) into one of three classes: healthy (0), diabetic patients with DME (1), and non-diabetic patients with other ocular diseases (2).
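Because the third task assigns one label per case rather than per B-scan, per-B-scan predictions have to be combined into a single case-level decision at some point. The sketch below shows one simple way to do this, a majority vote over B-scan labels. It is only an illustration: the voting rule, the tie-breaking choice, the function name `classify_volume`, and the sample case names are all assumptions, not something specified by the organizers, and the official document remains the reference for how submissions are evaluated.

```python
from collections import Counter

# Class labels as defined in the task description.
CLASS_NAMES = {
    0: "healthy",
    1: "diabetic with DME",
    2: "non-diabetic with other ocular disease",
}


def classify_volume(bscan_labels):
    """Return a case-level label by majority vote over per-B-scan labels.

    Ties are broken in favour of the smallest label value, which is an
    arbitrary choice made only for this illustration.
    """
    if not bscan_labels:
        raise ValueError("a case needs at least one B-scan prediction")
    counts = Counter(bscan_labels)
    best = max(counts.values())
    return min(label for label, n in counts.items() if n == best)


# Hypothetical per-B-scan predictions for two cases.
predictions = {
    "case_A": [0, 0, 1, 0, 0],
    "case_B": [2, 1, 2, 2, 1, 2],
}
volume_labels = {case: classify_volume(p) for case, p in predictions.items()}
for case, label in volume_labels.items():
    print(case, label, CLASS_NAMES[label])
```

A hard vote ignores classifier confidence; averaging per-B-scan class probabilities before taking the maximum is a common alternative when such probabilities are available.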

Keywords: Optical Coherence Tomography (OCT), De-noising, Super-resolution, Classification.

Full technical details, dataset(s), evaluation metrics, and all other pertinent information about the competition are located in the 2024 VIP Cup Official Document.

Important Dates

- Challenge announcement: January 2024

- Release of the training dataset: 31 January 2024

- Team Registration Deadline: 30 March 2024 (Register and submit team's work)

- Release of test dataset 1: 30 April 2024 (Download dataset)

- Deadline for final submission of team’s work: 15 June 2024

- Announcement of 3 finalist teams: 15 July 2024

- Final competition at ICIP 2024: 27-30 October 2024

IMPORTANT! The final report should be sent by email to misp@mui.ac.ir. It should contain the group information, a comprehensive description of the methodology, sample outputs, results of the training and test phases, evaluation criteria with selected ROC curves, any other information regarding the algorithms, and a link for downloading the results of the algorithm.

Registration and Important Resources

Official VIP Cup Team Registration

- All teams MUST be registered through the official competition registration system before the deadline in order to be considered as a participating team. Teams also MUST acknowledge and agree to the SPS Student Terms and Conditions, and meet all eligibility requirements at the time of team registration as well as throughout the competition. The Agreement Form can be found in the Official Terms & Conditions document linked in the top section of this page.

- Registration Link: Register your team for the 2024 VIP Cup before 30 March 2024 and submit work before 15 June 2024!

- Download the training dataset!

Competition Organizers

Medical Image and Signal Processing Research Center, Isfahan University of Medical Sciences, Isfahan, Iran.

- Professor Hossein Rabbani, Medical Image and Signal Processing Research Center, Isfahan University of Medical Sciences, Isfahan, Iran

- Dr. Azhar Zam, Tandon School of Engineering, New York University, Brooklyn, NY, 11201, USA and Division of Engineering, New York University Abu Dhabi (NYUAD), Abu Dhabi, United Arab Emirates

- Dr. Farnaz Sedighin, Medical Image and Signal Processing Research Center, Isfahan University of Medical Sciences, Isfahan, Iran

- Dr. Parisa Ghaderi-Daneshmand, Medical Image and Signal Processing Research Center, Isfahan University of Medical Sciences, Isfahan, Iran

- Dr. Mahnoosh Tajmirriahi, School of Advanced Technologies in Medicine, Isfahan University of Medical Sciences, Isfahan, Iran

- Dr. Alireza Dehghani, Department of Ophthalmology, School of Medicine, Isfahan University of Medical Sciences, Isfahan, Iran and Didavaran Eye Clinic, Isfahan, Iran

- Mohammadreza Ommani, Didavaran Eye Clinic, Isfahan, Iran

- Arsham Hamidi, Biomedical Laser and Optics Group (BLOG), Department of Biomedical Engineering, University of Basel, Basel, Switzerland

Finalist Teams

Grand Prize Recipient:

Team Name: IITRPR-OCT-Diagnose-2024

University: Indian Institute of Technology Ropar

Supervisor: Dr. Puneet Goyal

Tutor: Joy Dhar

Undergraduate Students:

Ankush Naskar, Ashish Gupta, Hemlata Gautam, Satvik Srivastava, Utkarsh Patel

First Runner Up:

Team Name: Ultrabot

University: Vietnamese German University

Supervisor: Cuong Nguyen Tuan

Tutor: Chau Truong Vinh Hoang

Undergraduate Students:

Duong Tran Hai, Huy Nguyen Minh Nhat, Phuc Nguyen Song Thien, Triet Dao Hoang Minh

Second Runner Up:

Team Name: The Classifiers

University: Isfahan University of Technology

Supervisor: Mohsen Pourazizi

Tutor: Sajed Rakhshani

Undergraduate Students:

Amirali Arbab, Amirhossein Arbab, Aref Habibi

Contacts

- Competition Organizers (technical, competition-specific inquiries): Medical Image and Signal Processing Research Center, Isfahan University of Medical Sciences, Isfahan, Iran. Email: misp@mui.ac.ir

- SPS Staff (Terms & Conditions, Travel Grants, Prizes): Jaqueline Rash, SPS Membership Program and Events Administrator

- SPS Student Services Committee: Angshul Majumdar, Chair