IEEE VIP Cup 2021 at ICIP 2021

IEEE VIP Cup 2021

Privacy-Preserving In-Bed Human Pose Estimation

Sponsored by the IEEE Signal Processing Society | ICIP 2021 Website | Sunday, September 19, 2021 | VIP Cup 2021 Website

Supported by

- Northeastern University

- ICIP committee

- IVMSP (Image, Video, and Multidimensional Signal Processing) Technical Committee

Introduction

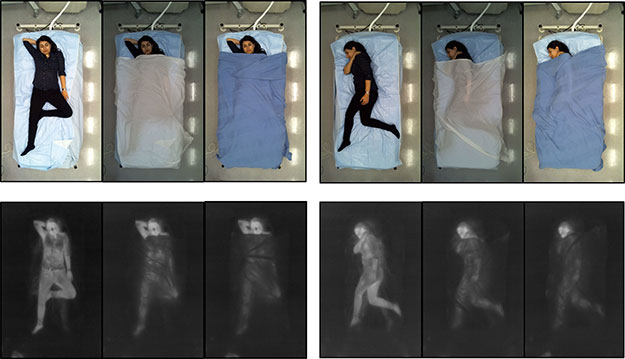

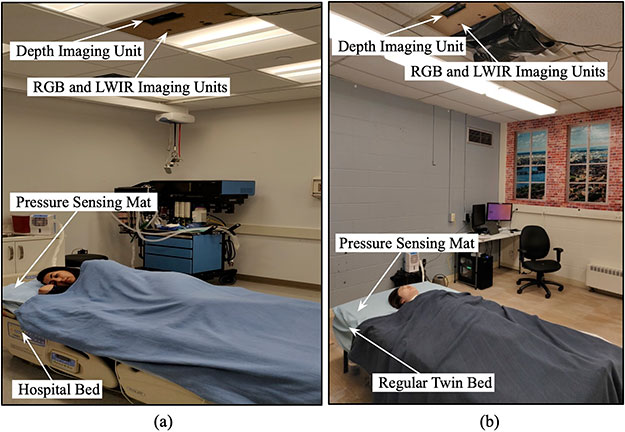

Every person spends around one-third of their life in bed. For an infant or a young toddler this share can be much higher, and for bed-bound patients it can reach 100% of their time. Automatic non-contact human pose estimation has received considerable attention and seen notable success in the artificial intelligence (AI) community over the last few years, thanks to the introduction of deep learning and its modeling power. However, state-of-the-art vision-based AI algorithms in this field struggle with the challenges of in-bed human behavior monitoring. These include significant illumination changes (e.g., full darkness at night) and heavy occlusion (e.g., being covered by a sheet or blanket). They also include privacy concerns that hinder the large-scale data collection needed to train any AI model.

In principle, transferring pose estimation from labeled uncovered cases to unlabeled covered ones can be framed as a domain adaptation problem. Although multiple datasets exist for human pose estimation, they mainly consist of RGB images of daily activities, which show a large domain shift from our target problem. Domain adaptation has been studied in machine learning for decades. However, mainstream algorithms focus mainly on classification rather than on regression tasks such as pose estimation. The 2021 VIP Cup challenge is therefore a domain adaptation problem for regression, with a practical application to in-bed human pose estimation, that has not been addressed before.

In this 2021 VIP Cup challenge, we seek computer vision-based solutions for in-bed pose estimation under the covers. No annotations are available for covered cases during model training, but contestants have access to large amounts of labeled data for no-cover cases. Successfully completing this task would enable in-bed behavior monitoring technologies to work on novel subjects and in new environments for which no prior training data is available. For more information about the competition, please visit the IEEE VIP Cup 2021 website.

Prize

- The Champion: $5,000

- The 1st Runner-up: $2,500

- The 2nd Runner-up: $1,500

Eligibility Criteria

Each team must be composed of: (i) one faculty member (the Supervisor); (ii) at most one graduate student (the Tutor); and (iii) at least 3 but no more than 10 undergraduates. At least three of the undergraduate team members must be either IEEE Signal Processing Society (SPS) members or SPS student members.

Important Dates

- May 17, 2021 - Competition guidelines and Training + Validation Sets released

- July 15, 2021 - Test Set 1 and submission guidelines for the evaluation released

- July 30, 2021 - Submission deadline for evaluation (test results, formal report, and code)

- August 30, 2021 - Finalists (best three teams) announced

- September 19, 2021 - Competition on Test Set 2 at ICIP 2021

Organizing Committee

Augmented Cognition Lab at Northeastern University.

- Sarah Ostadabbas (ostdabbas@ece.neu.edu)

- Shuangjun Liu (shuliu@ece.neu.edu)

- Xiaofei Huang (xhuang@ece.neu.edu)

- Nihang Fu (nihang@ece.neu.edu)

Finalist Teams

Grand Prize - Team Name: Samaritan

University: Bangladesh University of Engineering and Technology

Supervisor: Mohammad Ariful Haque

Students:

Sawradip Saha, Sanjay Acharjee, Aurick Das, Shahruk Hossain, Shahriar Kabir

First Runner-Up - Team Name: PolyUTS

University: The Hong Kong Polytechnic University & University of Technology Sydney

Supervisor: Kin-Man Lam

Tutor: Tianshan Liu

Students:

Zi Heng Chi, Shao Zhi Wang, Chun Tzu Chang, Xin Yue Li, Akshay Holkar, Samantha Pronger, Md Islam

Second Runner-Up - Team Name: NFPUndercover

University: University of Moratuwa

Supervisor: Chamira Edussooriya

Tutor: Ashwin De Silva

Students:

Jathurshan Pradeepkumar, Udith Haputhanthri, Mohamed Afham Mohamed Aflal, Mithunjha Anandakumar