SSP-APPL

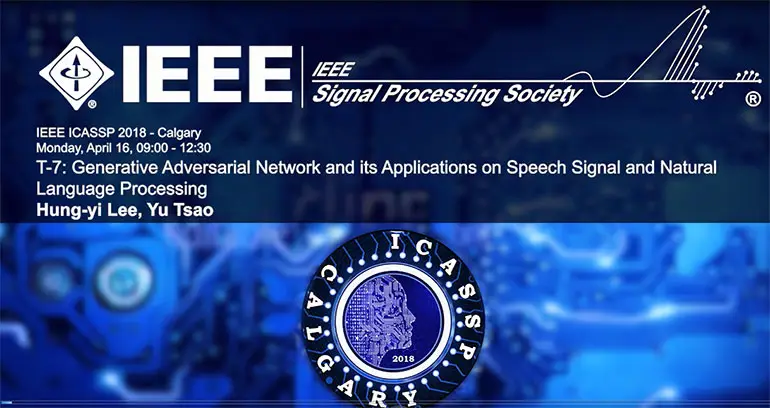

Generative Adversarial Network and Its Applications on Speech Signal and Natural Language Processing

Representation, Extraction, and Visualization of Speech Information

Cognitive Assistance for the Blind

IEEE Signal Processing Society publications, tools, and author resources.

Upcoming events, deadlines, and planning resources.

Signal processing education and professional development programs for all career levels.

Learn about SPS membership, Member Programs, and Technical Committees, and access shared tools and support.

The IEEE Signal Processing Society is dedicated to supporting the professional growth and career advancement of its members in the dynamic field of signal processing.

Resources, tools, and support for SPS volunteer leaders.

| SPS Members | IEEE Members | Non-members |
|---|---|---|
| $0.00 | $11.00 | $15.00 |

| SPS Members | IEEE Members | Non-members |
|---|---|---|
| $0.00 | $0.00 | $0.00 |
