Contributed by Seok-Hwan Park, Seongah Jeong, Jinyeop Na, Osvaldo Simeone and Shlomo Shamai, based on the IEEE Xplore® article, “Collaborative Cloud and Edge Mobile Computing in C-RAN Systems with Minimal End-to-End Latency”, published in the IEEE Transactions on Signal and Information Processing over Networks, 2021.

Collaborative Cloud-Edge Computing

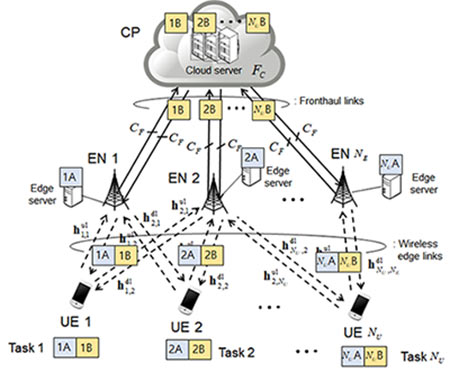

Mobile cloud and edge computing techniques enable computationally heavy applications such as gaming and augmented reality (AR) by offloading computation tasks from battery-limited mobile user equipments (UEs) to cloud and edge servers, which are located at the cloud processor (CP) and the edge nodes (ENs) of a cellular architecture, respectively [1][2]. Cloud and edge computing have different target applications. Cloud computing is suited to latency-insensitive, computationally heavy tasks, whereas edge computing is suited to tasks of low or moderate complexity with stringent latency requirements. To handle the variety of tasks expected in upcoming cellular services, a system can be designed with both cloud and edge computing capabilities, so that computational tasks are partially offloaded to the ENs and to the CP [3][4]. Fig. 1 illustrates an example of such a collaborative cloud-edge computing system. The task of each UE is split into two subtasks, which are processed collaboratively by the nearby EN and the CP. Provided that the task splitting ratios are optimized, this approach can outperform conventional offloading schemes that rely on edge computing or cloud computing alone.

Figure 1. Collaborative cloud-edge computing scheme within a C-RAN architecture.

Collaborative Computing within a C-RAN Architecture

Computation offloading needs to be designed by taking into account the constraints imposed by the Radio Access Network (RAN), such as the end-to-end latency, which encompasses the contributions of both communication and computation. The joint optimization of communication and computational resources for the collaborative cloud-edge computing scheme was studied in [3] with the goal of minimizing the end-to-end latency. Reference [3] focused on a distributed RAN (D-RAN) architecture, in which the ENs perform local signal processing for channel encoding and decoding. As a result, the overall latency performance can be degraded by interference in dense networks. In [4], it was proposed to integrate collaborative fractional cloud-edge offloading within a cloud RAN (C-RAN) architecture [5], while accounting for the contributions of both uplink and downlink. In a C-RAN, joint signal processing at the CP, in the form of cooperative precoding and detection, enables effective interference management. Jointly optimizing communication and computational resources within a C-RAN is challenging, since the design space includes fronthaul compression and cooperative beamforming strategies, which are characterized by large-dimensional complex matrices. In [4], the problem of minimizing the two-way end-to-end latency was tackled by adopting the matrix fractional programming (FP)-based algorithm of [6].
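To see why an optimized split can beat either extreme, consider a deliberately simplified toy model. It is an assumption made here for illustration and is not the system model of [3] or [4]. A task of $B$ bits is split so that a fraction $c$ is processed at the CP and the remaining fraction $1-c$ at the EN, and the two subtasks are handled in parallel. The symbols $a_E$ and $a_C$ are hypothetical per-bit latencies (in seconds per bit, both positive) of the edge and cloud paths, each lumping together communication and computation.

```latex
\begin{align}
T(c) &= \max\bigl\{(1-c)\,B\,a_E,\; c\,B\,a_C\bigr\}, \qquad 0 \le c \le 1, \\
c^\star &= \frac{a_E}{a_E + a_C}, \qquad
T(c^\star) = B\,\frac{a_E\,a_C}{a_E + a_C} \;<\; B\,\min\{a_E,\, a_C\}.
\end{align}
```

The first argument of the maximum decreases in $c$ and the second increases, so the maximum is smallest where the two are equal, which gives $c^\star$. Under these assumptions the split latency is strictly below both the edge-only latency $B\,a_E$ and the cloud-only latency $B\,a_C$. The design in [4] is considerably richer, since the communication terms themselves depend on the beamforming and fronthaul compression variables, which this sketch treats as fixed.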

Performance Assessment

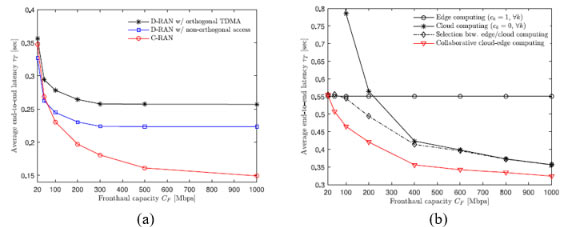

Figure 2. Assessment of the optimized end-to-end latency of the collaborative cloud-edge computing system within a C-RAN architecture [4]: (a) comparison between D-RAN and C-RAN architectures; (b) comparison among edge computing, cloud computing, and collaborative cloud-edge computing systems.

In Fig. 2-(a), we compare the minimal end-to-end latency of the collaborative cloud-edge computing scheme under D-RAN and C-RAN architectures. For D-RAN, we consider both orthogonal time-division multiple access (TDMA) and non-orthogonal multiple access (NOMA) techniques. Fig. 2-(a) shows that deploying a C-RAN architecture is more advantageous when the fronthaul links have sufficient capacity, because C-RAN enables more effective interference management by means of centralized encoding and decoding at the CP. Fig. 2-(b) compares the minimal end-to-end latency of the edge computing, cloud computing, and collaborative cloud-edge computing schemes. The edge computing scheme operates with a D-RAN architecture, while the remaining schemes operate with C-RAN. Since edge computing does not require the cloud server or the fronthaul links, its performance is not affected by the fronthaul capacity constraint. In contrast, the end-to-end latency of cloud computing and collaborative computing decreases as the fronthaul capacity increases. The simulations verify that selecting between the edge and cloud computing schemes for each channel realization does not yield significant benefits over using edge or cloud computing alone. On the other hand, the proposed collaborative cloud-edge computing scheme, with the optimal allocation of computational and communication resources, achieves notable performance gains, particularly in the regime of intermediate fronthaul capacity values.
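The qualitative behaviour described above can be illustrated with a small Python sketch. It does not reproduce Fig. 2 or the algorithm of [4]. It assumes a toy model in which each path has a fixed per-bit latency (access link, plus fronthaul for the cloud path, plus computation) and the edge and cloud subtasks run in parallel. The function name `toy_latencies` and every numeric default (task size, rates, CPU speeds) are hypothetical values chosen only for illustration.

```python
def toy_latencies(fronthaul_bps, task_bits=1e6, access_bps=20e6,
                  cycles_per_bit=100.0, edge_hz=2e9, cloud_hz=20e9):
    """Toy end-to-end latencies in seconds; all defaults are made-up values."""
    # Per-bit latency (s/bit) of each path: communication plus computation.
    a_edge = 1.0 / access_bps + cycles_per_bit / edge_hz
    a_cloud = (1.0 / access_bps + 1.0 / fronthaul_bps
               + cycles_per_bit / cloud_hz)

    edge = task_bits * a_edge      # whole task processed at the EN
    cloud = task_bits * a_cloud    # whole task processed at the CP
    selection = min(edge, cloud)   # pick the better of the two, no splitting

    # Assumed parallel processing: the latency is the maximum of the two
    # subtask latencies, minimized when both subtasks finish together.
    cloud_fraction = a_edge / (a_edge + a_cloud)
    collaborative = task_bits * a_edge * a_cloud / (a_edge + a_cloud)

    return {"edge": edge, "cloud": cloud, "selection": selection,
            "collaborative": collaborative, "cloud_fraction": cloud_fraction}


if __name__ == "__main__":
    # Sweep the (hypothetical) fronthaul capacity and print latencies in ms.
    for c_fh in (5e6, 10e6, 20e6, 50e6, 200e6, 1e9):
        r = toy_latencies(c_fh)
        print(f"{c_fh / 1e6:7.0f} Mbit/s  "
              f"edge={r['edge'] * 1e3:6.1f}  cloud={r['cloud'] * 1e3:6.1f}  "
              f"selection={r['selection'] * 1e3:6.1f}  "
              f"collab={r['collaborative'] * 1e3:6.1f}  "
              f"cloud share={r['cloud_fraction']:.2f}")
```

In this toy model the edge-only latency is flat in the fronthaul capacity, the cloud-only and collaborative latencies fall as the capacity grows, and the gain of splitting over selection equals one plus the ratio of the smaller to the larger per-bit latency. That gain is largest (a factor of two) where the edge and cloud paths are balanced, which happens at an intermediate fronthaul capacity.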

References:

[1] H. T. Dinh, C. Lee, D. Niyato, and P. Wang, “A Survey of Mobile Cloud Computing: Architecture, Applications, and Approaches,” Wireless Commun. Mobile Comput., vol. 13, no. 18, pp. 1587–1611, Dec. 2013, doi: https://doi.org/10.1002/wcm.1203.

[2] P. Mach and Z. Becvar, “Mobile Edge Computing: A Survey on Architecture and Computation Offloading,” in IEEE Communications Surveys & Tutorials, vol. 19, no. 3, pp. 1628-1656, thirdquarter 2017, doi: https://doi.org/10.1109/COMST.2017.2682318.

[3] J. Ren, G. Yu, Y. He, and G. Y. Li, “Collaborative Cloud and Edge Computing for Latency Minimization,” IEEE Trans. Veh. Technol., vol. 68, no. 5, pp. 5031–5044, May 2019, doi: https://doi.org/10.1109/TVT.2019.2904244.

[4] S.-H. Park, S. Jeong, J. Na, O. Simeone and S. Shamai, “Collaborative Cloud and Edge Mobile Computing in C-RAN Systems With Minimal End-to-End Latency,” in IEEE Transactions on Signal and Information Processing over Networks, vol. 7, pp. 259-274, 2021, doi: https://doi.org/10.1109/TSIPN.2021.3070712.

[5] O. Simeone, A. Maeder, M. Peng, O. Sahin and W. Yu, “Cloud Radio Access Network: Virtualizing Wireless Access for Dense Heterogeneous Systems,” in Journal of Communications and Networks, vol. 18, no. 2, pp. 135-149, April 2016, doi: https://doi.org/10.1109/JCN.2016.000023.

[6] K. Shen, W. Yu, L. Zhao and D. P. Palomar, “Optimization of MIMO Device-to-Device Networks via Matrix Fractional Programming: A Minorization–Maximization Approach,” in IEEE/ACM Transactions on Networking, vol. 27, no. 5, pp. 2164-2177, Oct. 2019, doi: https://doi.org/10.1109/TNET.2019.2943561.