Will autonomous vehicles kill people? The answer is almost certainly yes. But human drivers kill people too. In 2016, the U.S. had 37,461 deaths in 34,436 human-operated motor vehicle crashes, a staggering average of 102 per day, according to the National Highway Traffic Safety Administration. An additional 2,350,000 people were injured or disabled. These figures are alarming, but there’s a solution on the horizon.

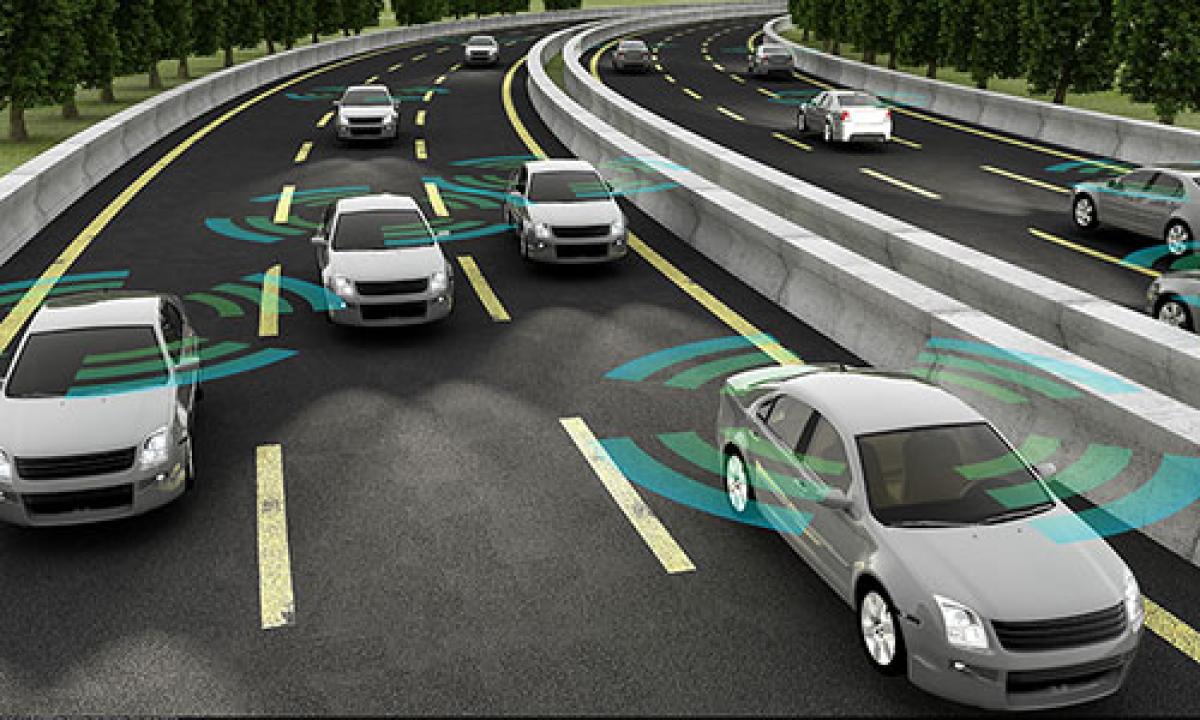

In the long term, highly or fully autonomous vehicles are expected to dramatically improve road safety. However, it may take decades for all vehicles to reach that level of automation. Meanwhile, roads will be shared by human drivers and autonomous vehicles with different levels of capability, a mix that creates challenges and complexities throughout a prolonged transition period.

The key culprits in the crisis are distracted driving, speeding and the failure to use seat belts. All of these can be accurately detected and effectively prevented by emerging computer vision-based driver monitoring solutions that leverage advanced machine learning algorithms.

Driver monitoring systems offer potentially life-saving capabilities, such as detecting fatigue, distraction, road rage and medical emergencies. Such perception systems often employ a single near-infrared camera with active illumination, so the camera can clearly see the driver even when the cabin is dark, whether it is mounted in front of the driver, behind the steering wheel or on the rearview mirror.

The images acquired by the camera are first analyzed in real time to detect the driver’s face and body. This detection is done by deep learning algorithms that run remarkably accurate, fully convolutional neural network variants on parallel processors such as GPUs and NPUs. The detected face region is then processed by a single neural network model that is trained jointly on multiple learning tasks to determine whether the driver is paying attention to the road. Such an AI model can track the driver's gaze direction and head pose to assess whether the driver is aware of potential dangers ahead. It can also estimate driver drowsiness by interpreting dynamic statistics such as the percentage of time that the pupil is covered by the eyelid, the frequency of nodding and yawning, facial expression, and the agility of saccadic eye movements.
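To make one of these drowsiness statistics concrete, the percentage of time the pupil is covered by the eyelid (commonly called PERCLOS in the drowsiness-detection literature) can be tracked over a sliding window of frames. The sketch below is illustrative only: the class name `PerclosMonitor`, the window length, and both thresholds are assumptions rather than values from any particular system, and the per-frame eyelid coverage is assumed to come from an upstream face-analysis model.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window estimate of the fraction of frames with the eye closed.

    Hypothetical helper for illustration; the defaults below are assumptions.
    """

    def __init__(self, window_frames=900, closed_threshold=0.8, alarm_level=0.15):
        # closed_threshold: eyelid coverage at or above which a frame counts as "closed".
        # alarm_level: PERCLOS value at or above which the driver is flagged as drowsy.
        self.closed_threshold = closed_threshold
        self.alarm_level = alarm_level
        # deque with maxlen discards the oldest frame automatically.
        self.window = deque(maxlen=window_frames)

    def update(self, eyelid_coverage):
        """Record one frame (0.0 = fully open, 1.0 = fully closed); return PERCLOS."""
        self.window.append(eyelid_coverage >= self.closed_threshold)
        return self.perclos()

    def perclos(self):
        """Fraction of frames in the current window that count as closed."""
        if not self.window:
            return 0.0
        return sum(self.window) / len(self.window)

    def is_drowsy(self):
        """True when the windowed closure fraction reaches the alarm level."""
        return self.perclos() >= self.alarm_level


if __name__ == "__main__":
    monitor = PerclosMonitor(window_frames=4)
    for coverage in [0.1, 0.9, 0.95, 0.2]:
        print(round(monitor.update(coverage), 3), monitor.is_drowsy())
```

In practice this would be one signal among several; a deployed system would presumably combine it with the nodding, yawning and eye-movement cues mentioned above before raising an alert.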

Some distraction stems from road rage or emotional stress and, of course, from secondary activities such as texting, using a cellphone, reaching for something in the cabin, eating or smoking. Existing systems can monitor such activities to estimate the driver's cognitive load, which matters when it becomes necessary to take control from the human operator or hand it back.

Investigation of two consecutive fatalities involving autonomous vehicles that happened in the same week in March 2018 reveals both accidents could have been prevented if an active driver monitoring system had been integrated into those vehicles.

Both autonomous vehicles and human drivers cause accidents, yet only autonomous vehicles have the potential to learn from previous mistakes in an online fashion, including mistakes made anytime, anywhere and by any autonomous vehicle in the world. By reinforcing what has been learned before, they can reach a level of expertise and prowess that many human drivers may not match. It is even rumored that some computer vision systems are being developed to learn from virtually synthesized accident scenarios, so that they keep improving even when the vehicle is not on the road.

About the Author:

Fatih Porikli is an IEEE Fellow from the Computer Society, Associate Editor of IEEE Signal Processing Society publications, and Professor of Research at the School of Engineering at the Australian National University (ANU). Dr. Porikli received his B.S. in electrical engineering from Bilkent University and his Ph.D. in electrical and computer engineering from New York University (NYU).