Contributed by Dr. Abderrahim Halimi, based on the IEEE Xplore® article “Real-Time Reconstruction of 3D Videos From Single-Photon LiDaR Data in the Presence of Obscurants,” published in the IEEE Transactions on Computational Imaging in February 2023, and the SPS Webinar “Real-time, Robust and Scalable Computational Methods For Extreme 3D Lidar Imaging,” available on the SPS Resource Center.

Introduction

The push to extend 3D imaging to challenging environments, such as underwater scenes or scenes viewed through obscurants, has driven significant innovation in single-photon LiDAR technology [1,2,3,4]. Single-photon LiDAR combines exceptional optical sensitivity with precise depth resolution, and has shown promise for real-time 3D imaging even under extreme light scattering. The study presented in "Real-Time Reconstruction of 3D Videos From Single-Photon LiDaR Data in the Presence of Obscurants" [5] introduces a statistical approach designed to improve 3D imaging under such demanding conditions. The method combines a statistical model of the observed photon data with an optimized parallel implementation to improve the reconstruction of 3D images in real time.

Proposed Method

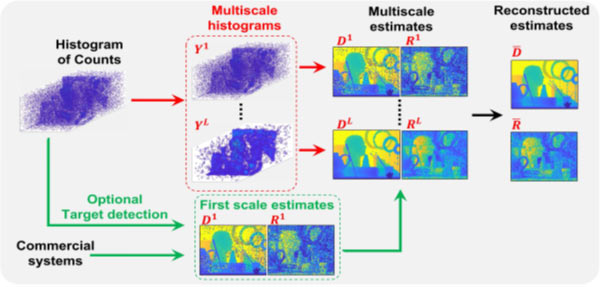

The proposed framework is a two-step statistical approach to real-time image reconstruction (see Fig. 1). The first step, an optional target detection method, selects informative pixels by identifying photons reflected from the target, which also aids data compression. The second step is a reconstruction algorithm that uses data statistics and multiscale information to generate clean depth and reflectivity images together with associated uncertainty maps. The distinctive feature of the method is that the statistical model is adapted to support parallel updates, so the algorithm can run on graphics processing units (GPUs) and analyze moving scenes in real time. The approach processes moving scenes at more than 50 depth frames per second for 128×128-pixel images, which makes it suitable for applications that require rapid and accurate 3D reconstruction, such as underwater imaging or navigation through obscured environments.

Fig. 1. [5] Schematic representation of the main steps of the proposed framework. The top path represents the classical way to consider multiscale information by processing histograms of counts. The bottom path shows the efficient approximation proposed in this article.
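To make the idea of multiscale information concrete, here is a minimal sketch of the classical route (the top path in Fig. 1): the histograms of neighbouring pixels are summed at several scales, and a depth is read from each aggregated histogram. This is not the efficient approximation proposed in [5]. The function names, the window radii, the peak-picking rule, and the toy data are all assumptions made for illustration.

```python
# Illustrative sketch only. Function names, radii and toy data are hypothetical
# and are not taken from the paper or its released code.

def aggregate_histograms(cube, radius):
    """Sum each pixel's histogram with those of its neighbours within `radius`.

    cube[r][c] is a list of photon counts per time bin for pixel (r, c).
    """
    rows, cols, bins = len(cube), len(cube[0]), len(cube[0][0])
    out = [[[0] * bins for _ in range(cols)] for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Each output pixel depends only on the input cube, so these
            # iterations are independent of one another.
            for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                    for t in range(bins):
                        out[r][c][t] += cube[rr][cc][t]
    return out


def peak_bin(hist):
    """Return the index of the most populated time bin (a crude depth estimate)."""
    return max(range(len(hist)), key=lambda t: hist[t])


def multiscale_depths(cube, radii=(0, 1)):
    """Return one depth-bin map per scale, keyed by window radius."""
    return {
        radius: [[peak_bin(h) for h in row]
                 for row in aggregate_histograms(cube, radius)]
        for radius in radii
    }


# Toy 3x3 scene with 8 time bins: the surface sits at bin 5 everywhere, but the
# centre pixel recorded only a single background photon in bin 1.
n_bins = 8
signal = [0] * n_bins
signal[5] = 2
noise_only = [0] * n_bins
noise_only[1] = 1
cube = [[list(signal) for _ in range(3)] for _ in range(3)]
cube[1][1] = list(noise_only)

depths = multiscale_depths(cube)
print(depths[0][1][1])  # finest scale: the centre pixel is misled by noise
print(depths[1][1][1])  # coarser scale: neighbours restore the surface bin
```

In this toy case the coarser scale recovers the surface bin at the centre pixel because the eight neighbouring histograms outvote the single background photon. The cost of processing full histograms at every scale is what motivates a cheaper approximation such as the bottom path in Fig. 1.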

Experimental Results

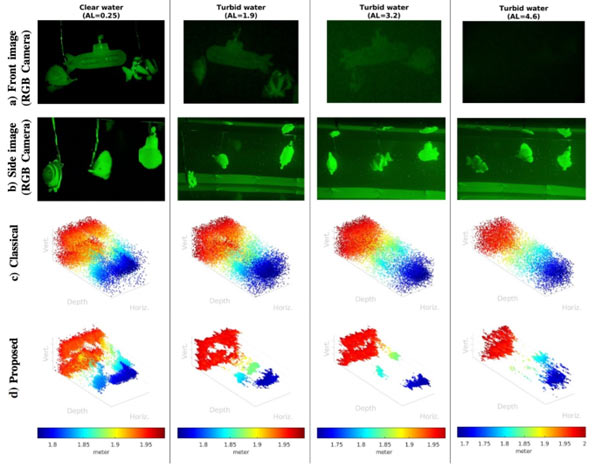

The method was tested on simulated and real underwater data and compared with existing algorithms (e.g., [4, 6]). It delivered fast and robust depth estimation, outperforming state-of-the-art algorithms in both speed and accuracy. It sustained real-time processing at more than 50 depth frames per second for 128×128-pixel images, which is critical in dynamic environments where both speed and accuracy matter. The real underwater experiments included imaging in turbid water with varying levels of obscurants (see Fig. 2). The results underscore the method's robustness and efficiency for real-world applications that demand high-speed, accurate 3D imaging under challenging conditions.
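The throughput figure can be turned into a rough time budget. The short calculation below uses only the two numbers quoted above (50 depth frames per second, 128×128 pixels); the variable names are hypothetical, and the per-pixel figure assumes a purely serial implementation, which is exactly what a parallel GPU design avoids.

```python
# Back-of-the-envelope budget from the quoted figures (variable names are illustrative).
frame_rate_hz = 50
height, width = 128, 128

pixels_per_frame = height * width                     # pixels in one depth frame
pixels_per_second = frame_rate_hz * pixels_per_frame  # depth estimates needed per second
frame_budget_ms = 1000 / frame_rate_hz                # time available per depth frame
serial_budget_us = 1e6 / pixels_per_second            # time per pixel if processed one by one

print(pixels_per_frame, pixels_per_second, frame_budget_ms, round(serial_budget_us, 2))
```

At 50 frames per second, each depth frame must be completed within 20 ms, which corresponds to 819,200 pixel estimates per second, or about 1.22 µs per pixel if pixels were handled sequentially.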

Fig. 2. [5] Results of 3D reconstruction by the proposed algorithm using 64 binary frames in each of the 4 attenuated-water scenarios (columns), with obscurant levels increasing from left to right. (a)–(b) show the processed frame from the front and side RGB cameras, respectively; (c) shows the result of the classical maximum likelihood algorithm; (d) shows the result of the proposed algorithm.
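For readers unfamiliar with the classical baseline in panel (c), the following sketch shows one common form of per-pixel maximum likelihood depth estimation from a stack of binary frames. It is written under assumptions that are not taken from [5]: a Poisson observation model, a Gaussian instrument response of known width, known reflectivity and background levels, and a response that lies fully inside the time window. All names and parameter values are hypothetical.

```python
import math

# Illustrative sketch only. The Gaussian response, the parameter values and the
# function names are assumptions, not the baseline implementation used in [5].


def histogram_from_binary_frames(frames, n_bins):
    """Build a timing histogram for one pixel.

    frames: one entry per binary frame, holding the detected time bin,
            or None when no photon was detected in that frame.
    """
    hist = [0] * n_bins
    for t in frames:
        if t is not None:
            hist[t] += 1
    return hist


def gaussian_response(t, depth, sigma):
    """Assumed instrument response centred on the depth bin."""
    return math.exp(-0.5 * ((t - depth) / sigma) ** 2)


def ml_depth(hist, sigma=1.0, reflectivity=1.0, background=0.05):
    """Grid-search maximum likelihood depth under a Poisson model.

    The depth-dependent part of the log-likelihood is
    sum_t y_t * log(r * f(t - d) + b); the remaining term is treated as
    constant in d, which neglects edge effects of the time window.
    """
    def log_likelihood(d):
        return sum(
            y * math.log(reflectivity * gaussian_response(t, d, sigma) + background)
            for t, y in enumerate(hist) if y
        )
    return max(range(len(hist)), key=log_likelihood)


# Toy pixel: 64 binary frames, 5 signal photons near bin 20 and 2 background photons.
frames = [None] * 57 + [19, 20, 20, 21, 20, 3, 47]
hist = histogram_from_binary_frames(frames, n_bins=64)
print(sum(hist), ml_depth(hist))
```

Because this estimator treats every pixel in isolation, it has little to work with when only a handful of photons are detected, which is the regime where spatial and multiscale information becomes useful.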

Conclusion

The proposed statistical framework for real-time 3D video reconstruction from single-photon LiDAR data is a significant advance in imaging technology. Its ability to provide fast, accurate, and reliable 3D reconstructions in environments with extreme scattering and obscurants opens new avenues in fields ranging from underwater research to autonomous vehicle navigation. Its use of GPU parallel processing makes statistically principled reconstruction fast enough for real-time analysis of dynamic 3D scenes.

Code: The code is available on GitHub.

Note on Cover Image: The image was created with the use of ChatGPT.


References:

[1] A. M. Wallace, A. Halimi, and G. S. Buller, “Full waveform LiDAR for adverse weather conditions,” IEEE Transactions on Vehicular Technology, vol. 69, no. 7, pp. 7064–7077, Jul. 2020, https://dx.doi.org/10.1109/TVT.2020.2989148.

[2] J. Rapp, J. Tachella, Y. Altmann, S. McLaughlin, and V. K. Goyal, “Advances in single-photon LiDAR for autonomous vehicles: Working principles, challenges, and recent advances,” IEEE Signal Processing Magazine, vol. 37, no. 4, pp. 62–71, Jul. 2020, https://dx.doi.org/10.1109/MSP.2020.2983772.

[3] G. Satat, M. Tancik, and R. Raskar, “Towards photography through realistic fog,” in IEEE International Conference on Computational Photography (ICCP), 2018, pp. 1–10, https://dx.doi.org/10.1109/ICCPHOT.2018.8368463.

[4] A. Halimi, A. Maccarone, R. A. Lamb, G. S. Buller, and S. McLaughlin, “Robust and guided Bayesian reconstruction of single-photon 3D LiDAR data: Application to multispectral and underwater imaging,” IEEE Transactions on Computational Imaging, vol. 7, pp. 961–974, 2021, https://dx.doi.org/10.1109/TCI.2021.3111572.

[5] S. Plosz, A. Maccarone, S. McLaughlin, G. S. Buller, and A. Halimi, “Real-Time Reconstruction of 3D Videos From Single-Photon LiDaR Data in the Presence of Obscurants,” IEEE Transactions on Computational Imaging, vol. 9, pp. 106–119, 2023, https://dx.doi.org/10.1109/TCI.2023.3241547.

[6] D. B. Lindell, M. O’Toole, and G. Wetzstein, “Single-photon 3D imaging with deep sensor fusion,” ACM Transactions on Graphics, vol. 37, no. 4, Jul. 2018, Art. no. 113, https://doi.org/10.1145/3197517.3201316.