What Makes Big Data “Big”? The Five V’s to Understanding Its Volume

Top Reasons to Join SPS Today!

1. IEEE Signal Processing Magazine

2. Signal Processing Digital Library*

3. Inside Signal Processing Newsletter

4. SPS Resource Center

5. Career advancement & recognition

6. Discounts on conferences and publications

7. Professional networking

8. Communities for students, young professionals, and women

9. Volunteer opportunities

10. Coming soon! PDH/CEU credits

Click here to learn more.

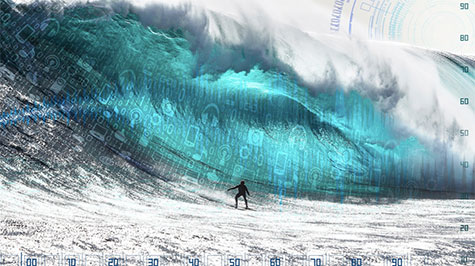

What Makes Big Data “Big”? The Five V’s to Understanding Its Volume

Have you encountered big data yet? Chances are you have. From Facebook to Twitter, from Google searches to YouTube videos, from bank statements to utility bills and shopping discount cards, big data is everywhere. But to understand its significance, we must first explore what data is, what makes it “big,” and how it ties to signal processing.

Some say data is anything digital that can be stored on a computer, but I would argue that data is anything that has been recorded and can be moved around, which is also at the heart of what it means for data to be “big.” Think, for example, about documents written on animal skins in ancient Egypt as early as 2500 B.C. These writings allowed information to be preserved, delivered and shared, even though the ability to copy and correct such data was limited at that time. But was it also “big”? The famous Royal Library of Alexandria, established in the third century B.C., was no doubt perceived as being very “big.” It could be considered among the largest collections of documents of its time. So why have we only recently begun to discuss the idea of data being “big”?

The reasons our attitudes toward big data changed can be clearly identified. The foremost is the proliferation of information and communication technologies (ICT) that digitize the world we live in. It is easy to store digital information indefinitely, move it reliably over any distance almost instantly, and share it in many places at the same time. The second reason is that we now generate data at unprecedented volumes and speeds. In fact, the vast majority of all existing data has been produced only in recent years. Digitalization is probably most noticeable in the digital media industry, as most people now buy books and music as digital files without even leaving their homes. But this is actually just a small part of big data (e.g., all music from around the world can be stored on a single inexpensive hard drive). Much more data will be generated by the sensors fueling the emerging internet of things (IoT), which aims to make our homes and cities smart(er) and more efficient (and not only in their use of energy). For example, engineers use mathematical models, combined with signal processing of data, to make accurate and instantaneous predictions of electricity or traffic capacity demands in a city, and to match them with a sufficient supply of resources.
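To make the idea of predicting demand from sensor data a little more concrete, here is a minimal sketch of simple exponential smoothing, one of the most basic signal processing filters used for short-term forecasting. It is only an illustration: the function name, the sample readings and the smoothing factor `alpha` are assumptions chosen for this example, and real demand models for electricity or traffic are far more elaborate.

```python
def exponential_smoothing_forecast(readings, alpha=0.5):
    """Forecast the next value of a series with simple exponential smoothing.

    `readings` is a hypothetical list of past demand measurements
    (for example, hourly electricity use); `alpha` weights recent data.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")

    # Start from the first reading, then blend in each new measurement.
    level = readings[0]
    for x in readings[1:]:
        level = alpha * x + (1 - alpha) * level

    # The smoothed level is used as the forecast for the next time step.
    return level


if __name__ == "__main__":
    # Made-up hourly demand values, purely for illustration.
    hourly_demand = [310.0, 325.0, 340.0, 338.0, 352.0]
    print(exponential_smoothing_forecast(hourly_demand, alpha=0.3))
```

A larger `alpha` makes the forecast react faster to recent readings, while a smaller one smooths out short-lived spikes.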

So how do we know what is “big” in big data? Scientists and engineers think about big data using five V's:

- Volume: How many bytes (or, more realistically, terabytes) of data there are

- Velocity: How quickly data is generated

- Value: Ability to turn data into benefits

- Veracity: The accuracy and credibility of the data

- Variety: How much of the data is structured and how much is unstructured
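Some of these V's can be estimated directly from the data itself. The sketch below is one hedged way to do so for a small batch of records. The function `summarize_batch`, the record layout and the proxies used for veracity (share of records without missing values) and variety (number of distinct field layouts) are assumptions made for this illustration, not standard definitions. Value is left out because it depends on what the data is used for rather than on the data alone.

```python
import json
from datetime import datetime, timedelta


def summarize_batch(records, started, finished):
    """Return rough volume, velocity, veracity and variety figures for one batch."""
    # Volume: size of the records once serialized as UTF-8 JSON.
    volume_bytes = sum(len(json.dumps(r).encode("utf-8")) for r in records)

    # Velocity: records per second over the collection window.
    seconds = (finished - started).total_seconds()
    velocity = len(records) / seconds if seconds > 0 else float("inf")

    # Veracity (crude proxy): share of records with no missing values.
    complete = sum(1 for r in records if all(v is not None for v in r.values()))
    veracity = complete / len(records) if records else 0.0

    # Variety (crude proxy): number of distinct field layouts in the batch.
    variety = len({tuple(sorted(r)) for r in records})

    return {
        "volume_bytes": volume_bytes,
        "velocity_records_per_s": velocity,
        "veracity": veracity,
        "variety": variety,
    }


if __name__ == "__main__":
    # Made-up sensor and text records, purely for illustration.
    start = datetime(2024, 1, 1, 12, 0, 0)
    batch = [
        {"id": 1, "temp": 20.5},
        {"id": 2, "temp": None},
        {"id": 3, "text": "hello"},
    ]
    print(summarize_batch(batch, start, start + timedelta(seconds=2)))
```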

Beyond these five V’s, there are other questions to be answered about big data. For example: Why does big data matter? How do we harness its benefits? How do we ensure privacy and security while using it? Answering these questions is no easy task, which is why data scientists are so important. They in turn need to collaborate closely with domain specialists (e.g., in healthcare, transportation and manufacturing) to improve decisions, use big data more efficiently and reduce the risks that using it poses for businesses.

The truth is that big data really is “big”: it can be generated by endless data streams from trillions of sources of very different types. Consequently, the technology for storing and processing big data must operate at enormous scales, up to the limits set by the five V's of data. Only a handful of vendors are capable of providing the required data infrastructure with adequate processing power and storage space. Just as important as the technology are the algorithms used to process such huge volumes of data accurately and at the required speeds. These algorithms are a product of the human brain, and this is where data scientists and other experts in signal processing and machine learning make their living. One area where big data (and its processing) is now making a big difference is research into living systems, from understanding the social behavior of animals to making new discoveries about the molecular processes inside biological cells. Sometimes we want algorithms to process incoming data in real time (e.g., instantaneously turning clicks on Amazon’s website into recommendations); in other cases, we prefer an accurate answer, even if getting it takes longer (e.g., answering queries by internet search engines).
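The difference between answering instantly and answering exactly can be illustrated with two toy statistics. In the sketch below, a running mean is updated in constant time as each value arrives and never stores the stream, whereas an exact median needs all the values collected first. The class and function names are hypothetical, and the example is not meant to describe how any recommendation system or search engine actually works.

```python
import statistics


class RunningMean:
    """Streaming estimate: constant work and memory per incoming value."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, x):
        # Incremental update, so past values never need to be stored.
        self.count += 1
        self.mean += (x - self.mean) / self.count
        return self.mean


def exact_median(values):
    """Batch answer: needs the complete data set before it can respond."""
    return statistics.median(values)


if __name__ == "__main__":
    # Made-up response times in milliseconds, purely for illustration.
    stream = [120, 95, 400, 110, 105]

    tracker = RunningMean()
    for value in stream:
        # An up-to-date answer is available after every single value.
        print("running mean so far:", tracker.update(value))

    # The exact median is only available once all values are collected.
    print("exact median:", exact_median(stream))
```

The running mean is always ready but is easily skewed by an outlier, while the median is more robust but comes at the cost of waiting for, and storing, the whole batch.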

What will the future of big data look like? We will no doubt do a lot of good things with big data, even though we are still in the learning phase. In any case, future consumers are likely to be empowered by big data, and its impact cannot be stopped.

About Pavel Loskot

Dr. Loskot is currently a senior engineering lecturer at Swansea University in Wales, U.K. He has gained long-term work, educational and cultural experience in the Czech Republic, Finland, Canada, the U.K. and China, and has an extensive portfolio of academic and industrial collaborative projects with a diverse range of institutions. Connect with Dr. Loskot on LinkedIn or send him an email at p.loskot@swansea.ac.uk.
