Reviewer Instructions for OJSP Reproducibility Reviews

Context

OJSP has initiated an enhanced reproducibility initiative for learning-based papers, i.e., papers where the primary results are obtained using a trained computational model, such as a deep neural network or a dictionary representation. For such submissions to OJSP, authors are required to submit a Code Ocean capsule that allows the computational results to be reproduced. Additional information can be found in the "Author Instructions for OJSP Reproducibility Review", on this page.

Reproducibility reviews are conducted once the manuscript has already been found acceptable via a conventional technical review of the manuscript contents. Reproducibility reviews are intended to facilitate validation and replication of the results presented in the paper using the author-provided Code Ocean capsule, and not as an additional review of the manuscript contents.

Overview

The goal of the reproducibility review is to perform a good faith validation of the OJSP manuscript’s computational results by using the capsule provided by the author on the Code Ocean computational platform. For the manuscript to pass the reproducibility review, the results obtained from the Code Ocean capsule should be representative of those presented in the manuscript, and consistent with reported performance metrics and claims asserted regarding the proposed method. Ideally, the Code Ocean capsule should reproduce all computational results from the manuscript for the proposed method(s), generating plots and table entries matching those in the manuscript. However, this may not always be feasible, for example, due to distribution restrictions on part of the data used in computing these results, a large number of ablation studies, etc. Reviewers should therefore exercise their judgment when making their assessments. Example cases included below provide guidance for some common situations that may be encountered.

Instructions

Upon assignment of a manuscript, reproducibility reviewers will receive an email providing a private review link via which they can access the author-provided capsule from their Code Ocean account (which they can create if they do not already have one). The link is private, and the reviewer identity will not be visible to the authors or other reviewers for the manuscript. Upon accessing the link, the reviewer’s Code Ocean account will also be credited with 100 hours of run-time to allow them to complete the assessment.

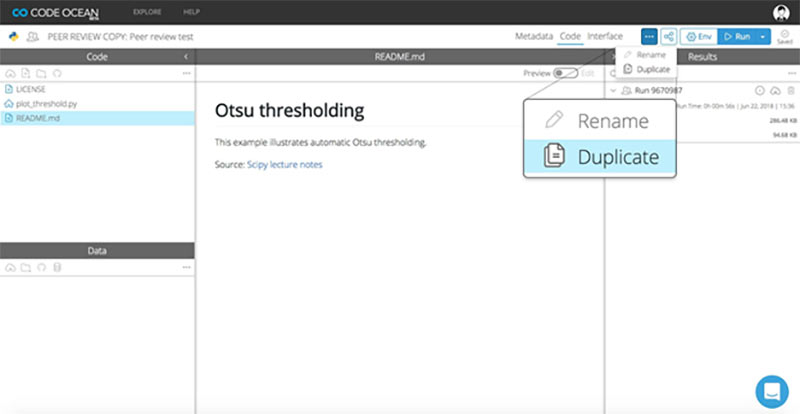

As a reproducibility reviewer, you will need to duplicate the entire compute capsule to your personal dashboard on Code Ocean, at which point you’ll be able to edit and run it, keeping track of any changes you make and results you generate. See detailed step-by-step instructions below. You have one week to complete your assessment and provide your report.

Your review report should be submitted through the journal review management system using the link provided in the reviewer assignment email. The review report should provide a summary assessment of whether or not the author-provided Code Ocean module substantiates and validates the results presented in the manuscript. Justification for the assessment should also be included in the report. We strongly encourage you to experiment with the Code Ocean capsule to explore alternative scenarios. For instance, if the code operates on input data, we strongly encourage you to also try alternative inputs, including, when possible, data other than those in the author-provided capsule. Note, however, that the reproducibility review is not intended to be an adversarial attempt to find unusual conditions under which the code fails. The process for creation of a Code Ocean module should ensure that the code runs without errors, but if you encounter any issues, please include them in your report. If you have suggestions for improving the code, or would like to recommend minor edits to the manuscript to improve the presentation, please also include these in your review report for the consideration of the Associate Editor.

When entering your research reproducibility review in the ScholarOne system that OJSP uses to manage its review process, you may see a general manuscript review form rather than one set up specifically for research reproducibility reviews. Several of the fields in the review form may therefore not be applicable. Please note that we will use two of the sections from the form: your "Comments to the Author" (to be conveyed anonymously to the authors, for example, for any potential improvement of their Code Ocean module), and your "Confidential Comments to the Associate Editor."

Confidentiality and Conflicts of Interest (Important)

Materials provided to you for the reproducibility review (i.e., the manuscript, the code, and any comments from the Associate Editor) are confidential. Do not share the private review link for the compute capsule or any of the other materials with anyone else, and do not disclose information about the peer review process or associated materials. Do not contact the manuscript authors under any circumstances. If you have any questions, please contact the OJSP administrator (Rebecca Wollman, r.wollman@ieee.org) or the OJSP Editor-in-Chief (Brendt Wohlberg, ojsp-eic@ieee.org), who can act as intermediaries to facilitate anonymous communication with the authors.

If your service as a reviewer for a paper would create a real or perceived conflict of interest, please immediately inform the OJSP administrator (Rebecca Wollman, r.wollman@ieee.org), so that the manuscript can be assigned to a different reviewer.

Reviewing Credit

Reproducibility reviewers will be acknowledged as reviewers for OJSP in the same manner as other manuscript reviewers and, should they choose to do so, will receive credit for reviewing on Publons.

Example Cases and Associated Guidance for Assessments

While by no means exhaustive, the different cases presented below highlight some situations that may be commonly encountered. Generalizations/extensions of these should be readily apparent, and reproducibility reviewers should exercise such common sense when performing their assessments. In situations where there are questions, please feel free to reach out to the OJSP Research Reproducibility Editor (ojsp-rre@ieee.org) or the EIC (ojsp-eic@ieee.org).

Case 1: Manuscript A proposes a deep learning based approach for audio source separation where the model is trained and evaluated on publicly available datasets. The author-provided Code Ocean capsule includes the final trained model and sample input data on which the code uses the model to obtain sample results. Numerical accuracy metrics are also computed by the code over the small input sample. As the reproducibility reviewer, you should attempt to run the code not only on the sample inputs provided but also on other publicly available samples for which ground truth is available for evaluation. If you repeat the experiment on the test dataset used in the manuscript, be aware, however, that hardware differences between the platforms used by the authors and the Code Ocean platform, and the randomization inherent in some models, may contribute to small differences in computed numerical metrics. Reproducibility should be called into question only if there are significant differences between the results reported in the manuscript and your evaluation (on the same test dataset). Small differences can be noted, but should not be a basis for a recommendation to reject due to a lack of reproducibility.
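As a rough illustration of separating small differences from significant ones, a reviewer might tabulate the reported and reproduced metrics and flag only those that differ by more than a chosen relative tolerance. The sketch below is one possible way to do this. The function name `flag_discrepancies`, the metric names, the numbers, and the 5% tolerance are all hypothetical placeholders; these guidelines do not prescribe a threshold, and the final call remains a matter of reviewer judgment.

```python
def flag_discrepancies(reported, reproduced, rel_tol=0.05):
    """Return metrics whose relative difference exceeds rel_tol.

    rel_tol is an illustrative value chosen by the reviewer,
    not a threshold defined by OJSP.
    """
    flagged = {}
    for name, ref in reported.items():
        if name not in reproduced:
            flagged[name] = None  # metric not produced by the capsule
            continue
        # Guard against division by zero when the reported value is 0.
        denom = abs(ref) if ref != 0 else 1.0
        rel_diff = abs(reproduced[name] - ref) / denom
        if rel_diff > rel_tol:
            flagged[name] = rel_diff
    return flagged


# Placeholder values for illustration only (not from any manuscript).
reported = {"SDR_dB": 10.0, "SIR_dB": 20.0}
reproduced = {"SDR_dB": 10.1, "SIR_dB": 16.0}

result = flag_discrepancies(reported, reproduced)
print(sorted(result))  # only SIR_dB exceeds the 5% tolerance here
```

Metrics that are flagged are candidates for closer inspection and for a note in the report; metrics within the tolerance can simply be recorded as small differences.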

Case 2: Manuscript B proposes a deep learning based approach for segmentation of infra-red images. The training and evaluation of the approach uses a proprietary dataset that is not publicly available. The manuscript presents individual segmentation maps for a small subset of images from the dataset, and a numerical evaluation on the larger dataset. You can base your assessment on the smaller subset of images for which individual segmentation maps are provided. Because the authors have included these individual images and the corresponding segmentation maps in their manuscript, they should also be able to include these in the Code Ocean capsule along with their trained model. Numerical metrics for evaluating the segmentation accuracy can also be computed over this smaller dataset and should not be dramatically inconsistent with the numerical metrics reported over the complete dataset in the manuscript. Additionally, if you have access to other infra-red images from publicly accessible datasets, you can also explore the performance of the code on these and use the results to inform your assessment.

Case 3: Manuscript C proposes a new architecture for deep learning based natural speech recognition. In addition to evaluation of the approach on a publicly available speech benchmark dataset, the contribution of the individual architectural modifications to the performance of the proposed method is also empirically evaluated through a series of extensive ablation studies. The author-provided Code Ocean capsule provides the model for the (final) proposed architecture but not for each of the ablation studies. In this case, the fact that you cannot validate the results for the ablation studies using the author-provided capsule should not be considered a reason for summarily dismissing it for not reproducing the results from the manuscript. The main results are for the proposed architecture, and these can be validated on the public benchmark dataset using the author-provided capsule; such validation and publication of reviewed code significantly improves upon the predominant current practice. Additionally, you may perform experiments with other publicly available samples of natural speech for your assessment.

Case 4: Manuscript D proposes a deep learning based approach for identifying malignant regions in lung X-ray images. Because of the specialized expertise required and the tedium of labeling, only a small set of 15 ground truth images is available with pixel-wise labeling of malignant and non-malignant regions. The evaluation protocol in the manuscript uses a leave-one-out cross-validation approach in which 15 different deep neural network models are trained, each on a different selection of 14 images (from the 15 total), and tested on the one image not used for training. The manuscript presents example results for three of the images and reports numerical values for the average error obtained from the cross-validation based evaluation, which ensures that the evaluation image is not part of the set used for training. Because each model is quite large, the author-provided Code Ocean capsule provides only one of the 15 models from the cross-validation protocol, together with the 15 images and their corresponding labeled ground truth data. The module also computes the average error for the provided model over the 15 images, but the computed values do not exactly match those reported in the manuscript. In this situation, the numerical discrepancy between the values computed by the model and those reported in the manuscript does not, by itself, constitute a reason for recommending rejection for lack of reproducibility. The visual results on the image not included in the training of the provided model should match those presented in the manuscript for the same image, and the numerical error should be consistent with what can be expected from the cross-validation (usually smaller over the full set of 15 images than the cross-validation error, since 14 of those images were used to train the provided model).
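For reviewers less familiar with the protocol, the leave-one-out scheme described in Case 4 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: the function names `leave_one_out_splits` and `average_error` are hypothetical, and images are represented only by their indices 0 to 14.

```python
def leave_one_out_splits(n_images):
    """Yield (train_indices, test_index) pairs, one per held-out image."""
    for test_index in range(n_images):
        train_indices = [i for i in range(n_images) if i != test_index]
        yield train_indices, test_index


def average_error(fold_errors):
    """Cross-validation error: mean of the per-fold held-out errors."""
    return sum(fold_errors) / len(fold_errors)


splits = list(leave_one_out_splits(15))
for train_indices, test_index in splits:
    # The evaluation image is never part of the training set.
    assert test_index not in train_indices
    assert len(train_indices) == 14

print(len(splits))  # prints 15: one model per held-out image
```

A capsule that ships a single model corresponds to one of these 15 splits. Only the image held out in that split gives an unbiased view of the model, which is why an average over all 15 images would typically come out lower than the cross-validation error reported in the manuscript.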

Case 5: Manuscript E presents a dictionary learning approach for image super-resolution that is evaluated over a publicly available test dataset. The manuscript presents sample visual results for four images and numerical evaluation metrics over the entire test dataset. The author-provided Code Ocean capsule generates the visual results for the four images but does not provide any numerical evaluation metrics. In this scenario, you should first bring the issue to the attention of the OJSP Research Reproducibility Editor and the EIC (found on the Editorial Board page), so that they may contact the authors to update their module to provide the required functionality before you complete your assessment and provide your report. You should recommend that the manuscript be rejected for lack of reproducibility if the authors fail to update the module and do not provide a reasonable justification as to why the Code Ocean capsule they provide cannot also compute the numerical accuracy metrics that they report in their manuscript.

Detailed Instructions for Code Ocean

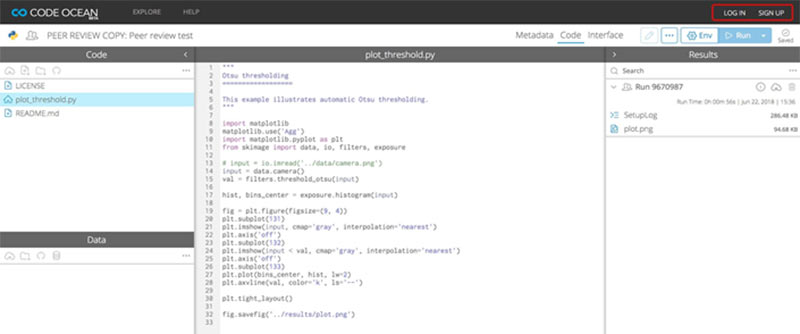

Step 1. Click on the private review link that you have received. This will take you to the Code Ocean code page, where you can view the code, data, environment and the results generated.

Step 2. If you wish to run the code, you will need to log in or sign up for Code Ocean.

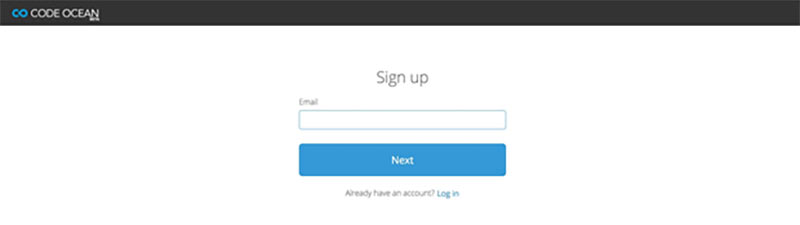

Step 3. If you do not have an account, you will be prompted to sign up for an account.

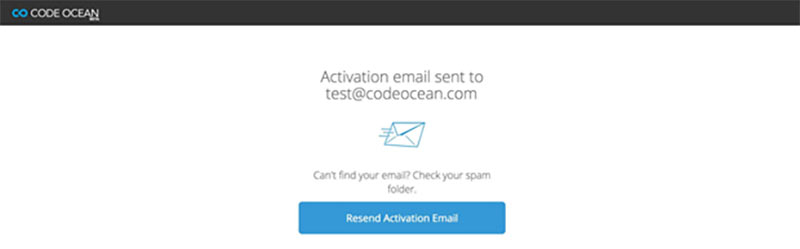

Step 4. You will need to confirm your email address.

Step 5. You will then be redirected back to the compute capsule. The compute capsule will be in “view only” mode. You will be able to view (or download) the code, data, and results; however, you will need to duplicate the compute capsule to run and/or make changes. The “view only” version will remain untouched, so you can compare any changes you have made or results you have generated in the duplicate copy. Any changes you make to the code, data and results on the duplicate copy will only be visible to you.
