Contributed by Dr. Jiabei Zeng, based on two IEEE Xplore® articles: “Occlusion Aware Facial Expression Recognition Using CNN With Attention Mechanism” and “Learning Representations for Facial Actions From Unlabeled Videos.”

Facial expression is a form of nonverbal communication and a primary means of conveying social information between humans. It is also closely related to human emotions, mental states, and intentions. Facial expression recognition (FER) has received significant interest from computer scientists and psychologists over recent decades. Although great progress has been made, facial expression recognition in the wild remains challenging because of unconstrained conditions such as occlusion and varying head poses.

To address these unconstrained conditions, we draw on the fact that facial expressions are configurations of different muscle movements in the face. The local characteristics of these muscle movements play an important role when machines distinguish facial expressions. To this end, we explore the local characteristics of facial expressions by introducing attention mechanisms in both a supervised and an unsupervised manner.

Attention mechanism for occlusion-aware facial expression recognition

Humans can recognize a facial expression even when the face is partially occluded, relying on the unblocked facial regions. For example, when some regions of the face are blocked (e.g., the lower left cheek), a person may judge the expression from the symmetric part of the face (e.g., the lower right cheek) or from other highly related facial regions (e.g., the regions around the eyes or mouth).

Inspired by this intuition, we propose a supervised convolutional neural network with an attention mechanism to recognize facial expressions from partially occluded faces. The attention module learns weights for different facial regions, which lets the network perceive the occluded regions of the face and focus on the most discriminative unoccluded regions.

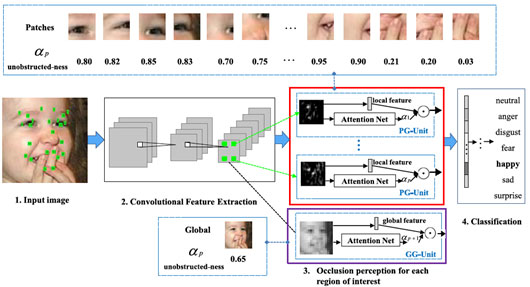

Fig. 1 illustrates the end-to-end learning framework of the proposed ACNN [1]. ACNN takes a facial image as input. The image is fed into a convolutional network and represented as a set of feature maps. ACNN then decomposes the feature maps of the whole face into multiple sub-feature maps, which represent different facial regions of interest (ROIs). Each regional representation is weighted by a Patch-Gated Unit (PG-Unit), which computes an adaptive weight from the region itself according to its unobstructedness and importance. As can be seen in Fig. 1, the last three visualized patches are blocked by the baby’s hand and thus have low unobstructedness (α_p). In addition to the weighted local representations, the feature maps of the whole face are encoded as a weighted vector by a Global-Gated Unit (GG-Unit). Finally, the weighted global facial features and the local representations are concatenated to form a representation of the occluded face. Two fully connected layers follow, classifying the face into one of the emotion categories. ACNN is optimized by minimizing the softmax loss.
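
The gating and fusion steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: it assumes each region of interest and the whole face have already been reduced to fixed-length vectors, and it replaces the convolutional attention branches of the PG-Unit and GG-Unit with a hypothetical linear projection followed by a sigmoid. The helper names (`gate`, `acnn_forward`), the sizes, and the 7-class output are chosen only for illustration.

```python
import math
import random

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def linear(x, weights, bias):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def gate(features, w, b):
    """Hypothetical gate: scalar alpha in (0, 1) computed from the features themselves."""
    alpha = sigmoid(sum(wi * fi for wi, fi in zip(w, features)) + b)
    return [alpha * f for f in features], alpha

def softmax_loss(logits, label):
    """Softmax cross-entropy, computed with the log-sum-exp trick."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]

def acnn_forward(patches, whole_face, params):
    """Gate each ROI vector (PG-Unit stand-in) and the global vector (GG-Unit stand-in),
    concatenate them, and classify with two fully connected layers."""
    local, alphas = [], []
    for feat, (w, b) in zip(patches, params["patch_gates"]):
        weighted, alpha = gate(feat, w, b)
        local.extend(weighted)
        alphas.append(alpha)
    global_vec, _ = gate(whole_face, *params["global_gate"])
    joint = local + global_vec  # concatenated local + global representation
    hidden = [max(0.0, h) for h in linear(joint, *params["fc1"])]  # FC + ReLU
    return linear(hidden, *params["fc2"]), alphas  # class logits, per-patch weights

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

# Toy sizes: a D-dim vector per region, N_PATCH regions, N_CLASSES categories.
rng = random.Random(0)
D, N_PATCH, HIDDEN, N_CLASSES = 4, 3, 8, 7
patches = [[rng.random() for _ in range(D)] for _ in range(N_PATCH)]
whole_face = [rng.random() for _ in range(D)]
params = {
    "patch_gates": [([rng.uniform(-1.0, 1.0) for _ in range(D)], 0.0) for _ in range(N_PATCH)],
    "global_gate": ([rng.uniform(-1.0, 1.0) for _ in range(D)], 0.0),
    "fc1": (random_matrix(HIDDEN, D * (N_PATCH + 1), rng), [0.0] * HIDDEN),
    "fc2": (random_matrix(N_CLASSES, HIDDEN, rng), [0.0] * N_CLASSES),
}
logits, alphas = acnn_forward(patches, whole_face, params)
loss = softmax_loss(logits, label=3)
```

A patch whose gate outputs a weight near 0, such as one covered by a hand, contributes almost nothing to the concatenated vector, so the classifier relies on the remaining regions and the global feature. In a real ACNN, the gate parameters are learned end to end with the rest of the network by minimizing the softmax loss.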

ACNN is an early successful attempt to handle facial occlusion with an attention mechanism. It was evaluated on both real and synthetic occlusions, including a self-collected facial expression dataset with real-world occlusions, the two largest in-the-wild facial expression datasets (RAF-DB and AffectNet), and versions of them modified with synthesized facial occlusions. Experimental results show that ACNN improves recognition accuracy on both non-occluded and occluded faces. Visualization results demonstrate that, compared with a CNN without gate units, ACNN shifts its attention from occluded patches to other related but unobstructed ones. Building on this effectiveness, later works [2], [3] have extended ACNN to address occlusion in facial expression analysis.

Attention mechanism for disentangling facial actions and head poses

Since facial expressions are configurations of different muscle movements in the face, and these movements are easy to detect without manual annotations, we use the movements as supervisory signals for learning facial action representations. However, the detected movements may be induced by both facial actions and head motions. In some cases, especially in uncontrolled scenarios, head motion is the dominant contributor to the movements. If the head-motion component is not removed from the supervisory signals, the learned features will not be discriminative enough to describe facial actions and expressions.
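
The claim that head motion can dominate the detected movement can be made concrete with a toy calculation. It assumes, as our own simplification rather than the exact formulation in [4], that the movement observed at a few facial points is the point-wise sum of an action-induced displacement and a head-motion displacement. All point choices and values are invented for illustration, and cosine similarity is used to measure which component the observed movement resembles.

```python
import math

def combine(a, b, sign=1.0):
    """Point-wise a + sign * b for two displacement fields of (dx, dy) pairs."""
    return [(ax + sign * bx, ay + sign * by) for (ax, ay), (bx, by) in zip(a, b)]

def norm(field):
    """Euclidean norm of a displacement field flattened into one vector."""
    return math.sqrt(sum(dx * dx + dy * dy for dx, dy in field))

def cosine(a, b):
    """Cosine similarity between two flattened displacement fields."""
    dot = sum(ax * bx + ay * by for (ax, ay), (bx, by) in zip(a, b))
    return dot / (norm(a) * norm(b))

# Hypothetical displacements (in pixels) at four facial points between two frames.
facial_action = [(-1.0, 0.5), (1.0, 0.5), (0.0, -0.5), (0.0, -0.5)]  # local, non-rigid
head_motion = [(4.0, 1.0)] * 4  # head movement modelled as a rigid shift

observed = combine(facial_action, head_motion)  # what a motion detector would see

print(round(cosine(observed, head_motion), 3))    # 0.979: dominated by head motion
print(round(cosine(observed, facial_action), 3))  # 0.206: weakly aligned with the action
recovered = combine(observed, head_motion, sign=-1.0)  # remove the head-motion part
print(recovered == facial_action)  # True (exact for these representable values)
```

When the rigid head shift is several times larger than the local action displacement, the observed movement points almost in the head-motion direction, so features trained to predict it would mostly encode pose. Subtracting the head-motion part recovers the action signal here only because the toy treats the head-motion field as known; in practice it has to be estimated, which is what the disentangling framework is designed to do.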

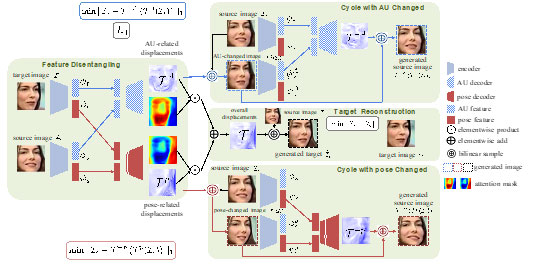

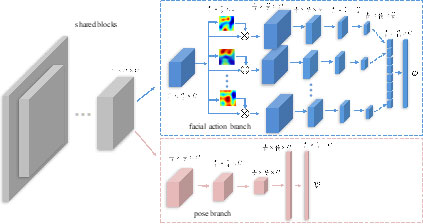

To distinguish movements induced by facial actions from those induced by head motion, we design a region perceptive encoder with multiple attention masks to extract facial action features [4]. Fig. 2 illustrates the self-supervised facial action representation learning framework, in which the attention mechanism is embedded in the encoder. The framework consists of four parts: feature encoding and decoding, a cycle with facial actions changed, a cycle with head pose changed, and target reconstruction.

In feature encoding and decoding, we encode the input images as features and then decode the features into two pixel displacements, which represent the movements induced by facial actions and by head motion between two facial images, respectively. We introduce an attention mechanism into the encoder to capture local facial features; the structure of the encoder is shown in Fig. 3. Through this attention module, the encoder discovers discriminative facial regions in an unsupervised manner and learns self-supervised representations that improve facial action unit detection. To make the separated movements physically meaningful, the two cycles control the quality of the three generated faces by maintaining consistency between the real images and the generated images. In target reconstruction, we integrate the two separated movements and use the result to transform the source image into the target image, ensuring that the two movements are sufficient to represent the change between the two face images.
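
A region perceptive encoder with multiple attention masks can be sketched as attention-weighted pooling over a feature map. The text does not specify how the masks are computed, so this pure-Python sketch assumes each mask is a spatial softmax over a per-mask linear score of the local features. The names `attention_masks`, `region_pool`, and `mask_weights` are hypothetical, and the weights are random rather than learned. Each mask yields one pooled vector, and their concatenation plays the role of the facial action representation.

```python
import math
import random

def spatial_softmax(scores):
    """Turn an H x W grid of scores into an attention mask that sums to 1."""
    peak = max(max(row) for row in scores)  # subtract max for numerical stability
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    return [[e / total for e in row] for row in exps]

def attention_masks(feature_map, mask_weights):
    """One mask per (hypothetical) weight vector: score each position with w . f."""
    masks = []
    for w in mask_weights:
        scores = [[sum(wi * fi for wi, fi in zip(w, f)) for f in row] for row in feature_map]
        masks.append(spatial_softmax(scores))
    return masks

def region_pool(feature_map, masks):
    """Attention-weighted average of the features under each mask, concatenated."""
    channels = len(feature_map[0][0])
    representation = []
    for mask in masks:
        pooled = [0.0] * channels
        for mask_row, feat_row in zip(mask, feature_map):
            for weight, feat in zip(mask_row, feat_row):
                for c in range(channels):
                    pooled[c] += weight * feat[c]
        representation.extend(pooled)
    return representation

# Toy feature map: H x W positions, each holding a C-dimensional feature vector.
rng = random.Random(0)
H, W, C, K = 4, 4, 3, 2  # K = number of attention masks (candidate facial regions)
feature_map = [[[rng.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]
mask_weights = [[rng.uniform(-2.0, 2.0) for _ in range(C)] for _ in range(K)]

masks = attention_masks(feature_map, mask_weights)
representation = region_pool(feature_map, masks)  # length K * C
```

Because each mask sums to 1, every pooled vector is a convex combination of local features, so a mask that concentrates on one area (for example, around the mouth) produces a feature dominated by that area. In a trained encoder, the mask parameters would be learned jointly with the rest of the network, which is how discriminative regions can emerge without region annotations.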

The proposed self-supervised method is an early attempt to discover discriminative facial regions in an unsupervised manner, and also an early attempt to learn facial action representations that are disentangled from head motion. It achieves performance comparable to state-of-the-art facial action unit detection methods on four widely used benchmarks.

The effectiveness of these two works indicates that facial regions are crucial for facial expression analysis. We first exploit predefined facial regions to address facial expression recognition with partially occluded faces. We then discover facial-action-related regions without annotations in a self-supervised learning framework. We believe that facial expression analysis could be further improved by introducing state-of-the-art attention mechanisms.

References:

[1] Y. Li, J. Zeng, S. Shan, and X. Chen. Occlusion Aware Facial Expression Recognition Using CNN With Attention Mechanism. IEEE Transactions on Image Processing, 28(5), 2439-2450, 2019. DOI: 10.1109/TIP.2018.2886767.

[2] K. Wang, X. Peng, J. Yang, D. Meng, and Y. Qiao. Region Attention Networks for Pose and Occlusion Robust Facial Expression Recognition. IEEE Transactions on Image Processing, 29, 4057-4069, 2020. DOI: 10.1109/TIP.2019.2956143.

[3] H. Ding, P. Zhou, and R. Chellappa. Occlusion-Adaptive Deep Network for Robust Facial Expression Recognition. IEEE International Joint Conference on Biometrics (IJCB), 2020.

[4] Y. Li, J. Zeng, and S. Shan. Learning Representations for Facial Actions From Unlabeled Videos. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020. DOI: 10.1109/TPAMI.2020.3011063.