Contributed by Dr. Lin Zhang, based on the IEEE Xplore® article “FSIM: A Feature Similarity Index for Image Quality Assessment”, published in the IEEE Transactions on Image Processing, 2011.

Introduction

With the rapid proliferation of digital imaging and communication technologies, image quality assessment (IQA) has become an important issue in numerous applications, such as image acquisition, transmission, compression, restoration, and enhancement. Since subjective IQA methods cannot be readily and routinely used in many scenarios, e.g., real-time and automated systems, it is necessary to develop objective IQA metrics that measure image quality automatically and robustly. Meanwhile, the evaluation results are expected to be statistically consistent with those of human observers. According to the availability of a reference image, objective IQA metrics can be classified as full-reference (FR), no-reference (NR), and reduced-reference (RR) methods [1]. In this paper, the discussion is confined to FR methods, where the original “distortion-free” image is known as the reference image.

Conventional metrics, such as the peak signal-to-noise ratio (PSNR) and the mean-squared error (MSE), operate directly on image intensities and do not correlate well with subjective fidelity ratings. Thus, many efforts have been made to design IQA metrics based on the human visual system (HVS). Such models emphasize the HVS’s sensitivity to different visual signals, such as luminance, contrast, frequency content, and the interaction between different signal components [2], [3]. The noise quality measure (NQM) [2] and the visual SNR (VSNR) [3] are two representatives. Other methods, such as the structural similarity (SSIM) index [1], are motivated by the need to capture the loss of structure in the image. SSIM is based on the hypothesis that the HVS is highly adapted to extract structural information from the visual scene; therefore, a measurement of structural similarity should provide a good approximation of perceived image quality.
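As a point of reference for the intensity-based metrics and SSIM discussed above, the following minimal Python/NumPy sketch computes MSE, PSNR, and a simplified SSIM. It assumes 8-bit images (peak value 255) and evaluates SSIM over a single global window with the commonly used constants K1 = 0.01 and K2 = 0.03; the SSIM index in [1] instead averages local statistics over sliding windows, so this version is for illustration only. The function names are hypothetical.

```python
import numpy as np


def mse(ref: np.ndarray, dist: np.ndarray) -> float:
    """Mean-squared error between two equally sized images."""
    diff = ref.astype(np.float64) - dist.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(ref: np.ndarray, dist: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit images."""
    err = mse(ref, dist)
    if err == 0.0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(peak ** 2 / err))


def ssim_global(ref: np.ndarray, dist: np.ndarray, peak: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM computed over ONE global window (a simplification, not the
    sliding-window index of [1])."""
    x = ref.astype(np.float64)
    y = dist.astype(np.float64)
    c1 = (k1 * peak) ** 2  # stabilizes the luminance term
    c2 = (k2 * peak) ** 2  # stabilizes the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2.0 * mx * my + c1) * (2.0 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

Because MSE and PSNR depend only on pixel-wise differences, two distortions with equal MSE receive identical PSNR regardless of how they affect image structure, which is the limitation that motivates structure- and feature-based indices.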

The great success of SSIM and its extensions stems from the fact that the HVS is adapted to the structural information in images. The visual information in an image, however, is often highly redundant, and the HVS understands an image mainly based on its low-level features, such as edges and zero crossings [4], [5]. In other words, the salient low-level features convey crucial information for the HVS to interpret the scene. Accordingly, perceptible image degradations will lead to perceptible changes in the image’s low-level features, and hence a good IQA metric could be devised by comparing the low-level feature sets of the reference image and the distorted image. Based on this analysis, in this paper we propose a novel FR IQA metric induced by low-level feature similarity, namely, the feature similarity (FSIM) index.

The Proposed Method

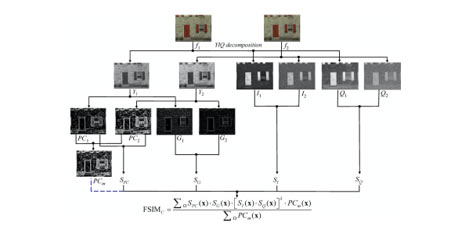

In this paper, a novel feature similarity (FSIM) index for full-reference IQA is proposed, based on the fact that the human visual system (HVS) understands an image mainly according to its low-level features. Specifically, phase congruency (PC), a dimensionless measure of the significance of a local structure, is used as the primary feature in FSIM. Since PC is contrast invariant while contrast information does affect the HVS’s perception of image quality, the image gradient magnitude (GM) is employed as the secondary feature. PC and GM play complementary roles in characterizing local image quality. After the local quality map is obtained, PC is used again as a weighting function to derive a single quality score. FSIMC extends FSIM by additionally incorporating chromatic information for color images. Extensive experiments performed on six benchmark IQA databases demonstrate that FSIM can achieve much higher consistency with subjective evaluations than state-of-the-art IQA metrics.

Figure 1: Illustration of the FSIM/FSIMC index computation. f1 is the reference image, and f2 is a distorted version of f1.
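The grayscale part of the computation illustrated in Fig. 1 can be sketched as follows, under several assumptions that go beyond this summary. The phase congruency maps pc1 and pc2 (values in [0, 1]) are taken as precomputed inputs, since computing PC with a bank of log-Gabor filters does not fit a short example. The gradient magnitude uses the Scharr operator, and the local similarities are S_PC = (2·PC1·PC2 + T1)/(PC1² + PC2² + T1) and S_G = (2·G1·G2 + T2)/(G1² + G2² + T2), combined as S_L = S_PC·S_G and pooled as FSIM = Σ S_L·PC_m / Σ PC_m with PC_m = max(PC1, PC2). The constants T1 = 0.85 and T2 = 160 (for 8-bit grayscale inputs) are intended to match the settings reported in [6] but should be checked against that paper; the chromatic terms of FSIMC and any preprocessing such as downsampling are omitted. All function names are hypothetical.

```python
import numpy as np

# Scharr kernels with 1/16 normalization (assumed operator choice, see lead-in).
SCHARR_X = np.array([[3, 0, -3], [10, 0, -10], [3, 0, -3]], dtype=np.float64) / 16.0
SCHARR_Y = SCHARR_X.T


def correlate3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 correlation with edge replication; output has the input's shape."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gradient_magnitude(img: np.ndarray) -> np.ndarray:
    """GM feature: sqrt(Gx^2 + Gy^2) from the Scharr responses."""
    img = img.astype(np.float64)
    gx = correlate3x3(img, SCHARR_X)
    gy = correlate3x3(img, SCHARR_Y)
    return np.sqrt(gx ** 2 + gy ** 2)


def fsim_from_pc(img1: np.ndarray, img2: np.ndarray,
                 pc1: np.ndarray, pc2: np.ndarray,
                 t1: float = 0.85, t2: float = 160.0) -> float:
    """Hypothetical FSIM sketch for grayscale images, given precomputed
    phase congruency maps pc1 and pc2 (PC itself is NOT computed here)."""
    g1 = gradient_magnitude(img1)
    g2 = gradient_magnitude(img2)
    s_pc = (2.0 * pc1 * pc2 + t1) / (pc1 ** 2 + pc2 ** 2 + t1)  # PC similarity
    s_g = (2.0 * g1 * g2 + t2) / (g1 ** 2 + g2 ** 2 + t2)       # GM similarity
    s_l = s_pc * s_g                 # local quality map (exponents assumed = 1)
    pc_m = np.maximum(pc1, pc2)      # PC reused as the pooling weight
    return float(np.sum(s_l * pc_m) / np.sum(pc_m))
```

With identical inputs every local similarity equals 1, so the score is 1; any change in gradients or PC lowers S_L at those locations, and the max(PC1, PC2) weighting lets structurally significant locations dominate the pooled score.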

Experiments and Results

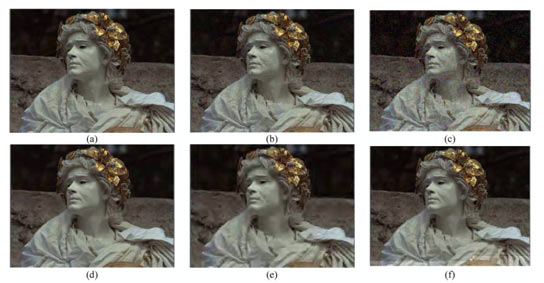

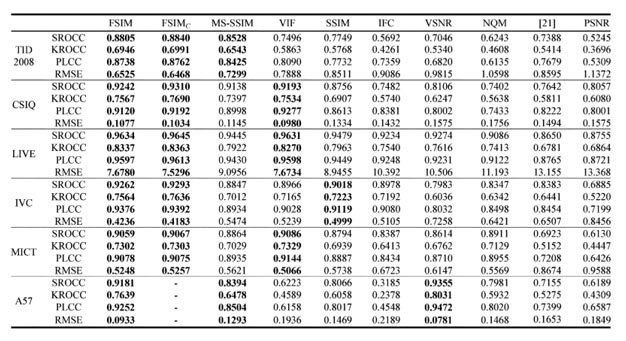

We use the example in Fig. 2 to demonstrate the effectiveness of FSIM/FSIMC in evaluating perceived image quality. We compute the quality of the images in Fig. 2(b)-(f) using various IQA metrics, and the results are summarized in Table 1, together with the subjective scores of these five images (extracted from TID2008). For each IQA metric and for the subjective evaluation, higher scores mean higher image quality.

Figure 2: (a) Reference image; (b)-(f) are the distorted versions of (a) in the TID2008 database. Distortion types of (b)-(f) are “additive Gaussian noise,” “spatially correlated noise,” “image denoising,” “JPEG 2000 compression,” and “JPEG transformation errors,” respectively.

Table 1: Performance comparison of IQA metrics on six benchmark datasets
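Consistency between an objective metric and subjective scores, such as the TID2008 scores listed in Table 1, is commonly quantified with rank-order correlation; the full paper [6] reports measures including the Spearman rank-order correlation coefficient (SROCC). A minimal NumPy sketch of SROCC with tie-averaged ranks follows; the function names, and any scores passed to them, are hypothetical and are not values from Table 1.

```python
import numpy as np


def average_ranks(values) -> np.ndarray:
    """Ranks 1..n; tied values receive the mean of the ranks they span."""
    values = np.asarray(values, dtype=np.float64)
    order = np.argsort(values, kind="mergesort")  # stable sort
    sorted_vals = values[order]
    ranks = np.empty(len(values), dtype=np.float64)
    n = len(values)
    i = 0
    while i < n:
        j = i
        while j + 1 < n and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # mean of ranks i+1..j+1
        i = j + 1
    return ranks


def srocc(metric_scores, subjective_scores) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    r1 = average_ranks(metric_scores)
    r2 = average_ranks(subjective_scores)
    return float(np.corrcoef(r1, r2)[0, 1])
```

Because SROCC depends only on rank order, a metric whose scores are any monotonically increasing function of the subjective scores attains SROCC = 1, which makes it suitable for comparing metrics whose outputs live on different scales.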

References:

[1] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. Image Process., vol. 13, no. 4, pp. 600-612, Apr. 2004, doi: https://dx.doi.org/10.1109/TIP.2003.81986.

[2] N. Damera-Venkata, T. D. Kite, W. S. Geisler, B. L. Evans, and A. C. Bovik, “Image quality assessment based on a degradation model,” IEEE Trans. Image Process., vol. 9, no. 4, pp. 636-650, Apr. 2000, doi: https://dx.doi.org/10.1109/83.841940.

[3] D. M. Chandler and S. S. Hemami, “VSNR: A wavelet-based visual signal-to-noise ratio for natural images,” IEEE Trans. Image Process., vol. 16, no. 9, pp. 2284-2298, Sep. 2007, doi: https://dx.doi.org/10.1109/TIP.2007.901820.

[4] D. Marr and E. Hildreth, “Theory of edge detection,” Proc. R. Soc. Lond. B, vol. 207, no. 1167, pp. 187-217, Feb. 1980.

[5] M. C. Morrone and D. C. Burr, “Feature detection in human vision: A phase-dependent energy model,” Proc. R. Soc. Lond. B, vol. 235, no. 1280, pp. 221-245, Dec. 1988.

[6] L. Zhang, L. Zhang, X. Mou, and D. Zhang, “FSIM: A feature similarity index for image quality assessment,” IEEE Trans. Image Process., vol. 20, no. 8, pp. 2378-2386, Aug. 2011, doi: https://dx.doi.org/10.1109/TIP.2011.2109730.