SPS Feed

Top Reasons to Join SPS Today!

1. IEEE Signal Processing Magazine

2. Signal Processing Digital Library*

3. Inside Signal Processing Newsletter

4. SPS Resource Center

5. Career advancement & recognition

6. Discounts on conferences and publications

7. Professional networking

8. Communities for students, young professionals, and women

9. Volunteer opportunities

10. Coming soon! PDH/CEU credits

Click here to learn more.

The Latest News, Articles, and Events in Signal Processing

Manuscript Due: 28 February 2024

Publication Date: December 2024

Manuscript Due: 30 November 2023

Publication Date: October 2024

Date: 11 May 2023

Chapter: Atlanta Chapter

Chapter Chair: Wendy Newcomb

Title: Synthetic Aperture Radar (SAR) Signal Processing Challenges and Data Sets for Associated Research

Underwater image enhancement has drawn considerable attention in both image processing and underwater vision. Because of the complex underwater environment and lighting conditions, enhancing underwater images is a challenging problem.

The Signal Processing research group at the Universität Hamburg has a 3-year opening funded by the German Research Foundation (DFG) on machine learning approaches for joint spatial-spectral multi-microphone speech enhancement.

See our webpage for details and for information on how to apply.

Date: 12 April 2023

Time: 3:00 PM CET

Speaker(s): Dr. Hao Zhu

Date: 12 July 2023

Time: 9:00 AM ET (New York Time)

Speaker(s): Dr. Weijie Yuan, Dr. Zhiqiang Wei, Dr. Shuangyang Li

Date: 7 July 2023

Time: 1:30 PM ET (New York Time)

Speaker(s): Dr. Kumar Vijay Mishra

Date: 30 June 2023

Time: 9:00 AM ET (New York Time)

Speaker(s): Dr. Fan Liu, Dr. Ya-Feng Liu, Dr. Christos Masouros

Date: 15-19 July 2024

Location: Niagara Falls, ON, Canada

Date: 4-7 December 2023

Location: Nürnberg, Germany

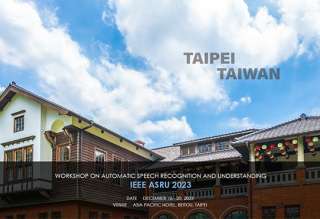

Date: 16-20 December 2023

Location: Taipei, Taiwan


SPS Social Media

- IEEE SPS Facebook Page https://www.facebook.com/ieeeSPS

- IEEE SPS X Page https://x.com/IEEEsps

- IEEE SPS Instagram Page https://www.instagram.com/ieeesps/?hl=en

- IEEE SPS LinkedIn Page https://www.linkedin.com/company/ieeesps/

- IEEE SPS YouTube Channel https://www.youtube.com/ieeeSPS