Contributed by Dr. Chongyi Li, based on the IEEEXplore® article, “An Underwater Image Enhancement Benchmark Dataset and Beyond”, published in the IEEE Transactions on Image Processing, 2020.

Introduction

Underwater image enhancement has drawn considerable attention in both image processing and underwater vision. Due to the complicated underwater environment and lighting conditions, enhancing underwater images is a challenging problem. Usually, an underwater image is degraded by wavelength-dependent absorption and scattering, including forward scattering and backward scattering. In addition, marine snow introduces noise and increases the effects of scattering. These adverse effects reduce visibility, decrease contrast, and even introduce color casts, which limits the practical applications of underwater images and videos in marine biology, archaeology, and marine ecology, to name a few. To solve this problem, earlier methods rely on multiple underwater images or polarization filters, while recent algorithms use only the information from a single image [1].

Despite the prolific work, both the comprehensive study and the insightful analysis of underwater image enhancement algorithms remain largely unsatisfactory due to the lack of a publicly available real-world underwater image dataset. It is practically impossible to simultaneously photograph a real underwater scene and the corresponding ground truth image for different water types. Lacking sufficient and effective training data, data-driven machine learning algorithms for underwater image enhancement have not matched the success achieved on other vision problems in the deep learning era.

To advance the development of underwater image enhancement, we construct a large-scale real-world Underwater Image Enhancement Benchmark (UIEB). Several sample images and the corresponding reference images from UIEB are presented in Fig. 1. With the proposed UIEB, we carry out a comprehensive study of several state-of-the-art single underwater image enhancement algorithms, both qualitatively and quantitatively. In addition, with the constructed UIEB, CNNs can be easily trained to improve the visual quality of an underwater image. To demonstrate this application, we propose an underwater image enhancement model (Water-Net) trained on the constructed UIEB. Water-Net outperforms traditional methods.

Fig. 1. Sample images from UIEB. Top row: raw underwater images taken in diverse underwater scenes; bottom row: the corresponding reference results.

UIEB Dataset

In what follows, we introduce the constructed dataset in detail, including data collection and reference image generation.

There are three objectives for underwater image collection: 1) a diversity of underwater scenes, different characteristics of quality degradation, and a broad range of image content should be covered; 2) the number of underwater images should be large; and 3) the corresponding high-quality reference images should be provided so that pairs of images enable fair image quality evaluation and end-to-end learning.

To achieve the first two objectives, we first collect a large number of underwater images and then refine them. These underwater images are collected from Google, YouTube, related papers, and our self-captured videos. We mainly retain the underwater images that meet the first objective. After data refinement, most of the collected images are weeded out, and about 950 candidate images remain. We present some examples of the images in Fig. 2.

Fig. 2. Examples of the images in UIEB. These images have obvious characteristics of underwater image quality degradation (e.g., color casts, decreased contrast, and blurring details) and are taken in a diversity of underwater scenes.

With the candidate underwater images, the potential reference images are generated by 12 image enhancement methods, including 9 underwater image enhancement methods, 2 image dehazing methods, and 1 commercial application for enhancing underwater images. With the raw underwater images and the enhanced results, we invite 50 volunteers (25 with image processing experience and 25 without related experience) to perform pairwise comparisons among the 12 enhanced results of each raw underwater image on the same monitor. Each volunteer selects the best result after 11 pairwise comparisons. The reference image for a raw underwater image is first selected by majority voting over these selections. After that, if more than half of the votes label the selected reference image as unsatisfactory, the corresponding raw underwater image is treated as a challenging image and the reference image is discarded. In total, we obtain 890 reference images, which have higher quality than the results of any individual method, and a challenging set of 60 underwater images. View the benchmark dataset.
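The selection procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `knockout_best` and `select_reference` are hypothetical, the 11 comparisons are modeled as a simple "winner stays" sequence, the majority vote is implemented as "most votes wins", and the vote data are made up.

```python
from collections import Counter


def knockout_best(candidates, prefers):
    """Return one volunteer's favourite result.

    Assumption: the volunteer keeps the current favourite and compares it
    with the next candidate, so 12 candidates need 11 comparisons.
    `prefers(a, b)` is a hypothetical callback that is True if a beats b.
    """
    best = candidates[0]
    for challenger in candidates[1:]:
        if prefers(challenger, best):
            best = challenger
    return best


def select_reference(best_picks, unsatisfied_counts):
    """Pick the reference by vote, or return None for a challenging image.

    best_picks: one winning method name per volunteer.
    unsatisfied_counts: method name -> number of volunteers who labeled
    that result as unsatisfactory.
    """
    votes = Counter(best_picks)
    winner, _ = votes.most_common(1)[0]
    # Discard the reference if more than half of the volunteers are unsatisfied.
    if unsatisfied_counts.get(winner, 0) > len(best_picks) / 2:
        return None
    return winner


# Made-up votes from 50 volunteers for one raw image.
picks = ["method_A"] * 30 + ["method_B"] * 20
print(select_reference(picks, {"method_A": 10}))  # method_A
print(select_reference(picks, {"method_A": 26}))  # None
```

Returning `None` marks the raw image as belonging to the challenging set, which has no reference image.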

Water-Net

Despite the remarkable progress of underwater image enhancement methods, the generalization of deep learning-based underwater image enhancement models [2] still falls behind the conventional state-of-the-art methods due to the lack of effective training data and well-designed network architectures. With the UIEB, we propose a CNN model for underwater image enhancement, called Water-Net.

As discussed, no algorithm generalizes to all types of underwater images due to the complicated underwater environment and lighting conditions. In general, the fusion-based method [3] achieves decent results, benefiting from inputs derived by multiple pre-processing operations and a fusion strategy. The proposed Water-Net adopts a similar strategy. Based on the characteristics of underwater image degradation, we generate three inputs by respectively applying White Balance (WB), Histogram Equalization (HE), and Gamma Correction (GC) algorithms to an underwater image. Specifically, the WB algorithm is used to correct the color casts, while the HE and GC algorithms aim to improve the contrast and lighten up dark regions, respectively.
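To make the three derived inputs concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the gray-world white balance, the per-channel histogram equalization, and the gamma value of 0.7 are illustrative assumptions, and all function names are hypothetical. Images are assumed to be float arrays of shape (H, W, 3) with values in [0, 1].

```python
import numpy as np


def white_balance_gray_world(img):
    """Correct color casts with the gray-world assumption (illustrative choice)."""
    channel_means = img.reshape(-1, 3).mean(axis=0)
    # Scale each channel so that its mean matches the overall mean.
    gain = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gain, 0.0, 1.0)


def histogram_equalization(img, bins=256):
    """Improve contrast by equalizing each channel independently (assumption)."""
    out = np.empty_like(img)
    for c in range(img.shape[2]):
        channel = img[..., c]
        hist, edges = np.histogram(channel, bins=bins, range=(0.0, 1.0))
        cdf = hist.cumsum().astype(np.float64)
        cdf /= cdf[-1]
        # Map each pixel value through the normalized cumulative histogram.
        out[..., c] = np.interp(channel, edges[1:], cdf)
    return out


def gamma_correction(img, gamma=0.7):
    """Lighten dark regions; gamma < 1 brightens values in [0, 1]."""
    return np.power(np.clip(img, 0.0, 1.0), gamma)


def derive_inputs(img):
    """Return the WB, HE, and GC versions of an underwater image."""
    img = np.asarray(img, dtype=np.float64)
    return (white_balance_gray_world(img),
            histogram_equalization(img),
            gamma_correction(img))


# Synthetic image with a bluish cast, for demonstration only.
rng = np.random.default_rng(0)
raw = rng.random((8, 8, 3)) * np.array([0.3, 0.6, 0.9])
wb, he, gc = derive_inputs(raw)
```

Each derived image targets one degradation (color cast, low contrast, dark regions), which is why a later fusion step can pick the most useful regions from each.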

Water-Net employs a gated fusion network architecture to learn three confidence maps, which are used to combine the three input images into an enhanced result. The learned confidence maps determine which features of the inputs are retained in the final result.

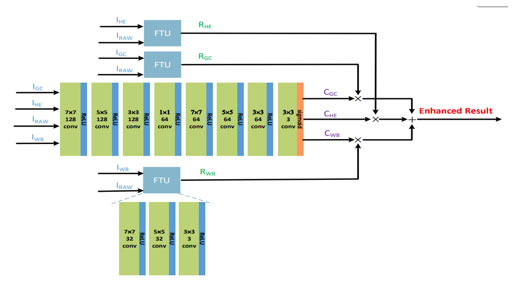

Fig. 3. An overview of the proposed Water-Net architecture. Water-Net is a gated fusion network, which fuses the inputs with the predicted confidence maps to obtain the enhanced result. The inputs are first transformed into refined inputs by the Feature Transformation Units (FTUs), and then the confidence maps are predicted. Finally, the enhanced result is obtained by fusing the refined inputs and the corresponding confidence maps.

The architecture of the proposed Water-Net and the parameter settings are shown in Fig. 3. As a baseline model, Water-Net is a plain fully convolutional network. We feed the three derived inputs and the original input to Water-Net to predict the confidence maps. Before performing fusion, we add three Feature Transformation Units (FTUs) to refine the three inputs. The purpose of the FTUs is to reduce the color casts and artifacts introduced by the WB, HE, and GC algorithms. Finally, the three refined inputs are multiplied by the three learned confidence maps to obtain the final enhanced result. View the code of the Water-Net.
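The final fusion step can be illustrated with a short NumPy sketch. It assumes that the enhanced result is the sum of the element-wise products of each refined input with its confidence map; the text above states only that the two are multiplied. The function name `gated_fusion` is hypothetical, and the confidence maps here are hand-made placeholders rather than network predictions.

```python
import numpy as np


def gated_fusion(refined_inputs, confidence_maps):
    """Fuse refined inputs with their confidence maps.

    Both arguments are sequences of three arrays of shape (H, W, 3).
    Assumption: the element-wise products are summed over the three branches.
    """
    refined = np.stack(refined_inputs)   # shape (3, H, W, 3)
    conf = np.stack(confidence_maps)     # shape (3, H, W, 3)
    return (refined * conf).sum(axis=0)


# Placeholder data: three random "refined inputs" and uniform confidences.
rng = np.random.default_rng(0)
refined = [rng.random((4, 4, 3)) for _ in range(3)]
uniform = [np.full((4, 4, 3), 1.0 / 3.0) for _ in range(3)]
enhanced = gated_fusion(refined, uniform)
```

With uniform confidence maps the result is simply the average of the three refined inputs; a learned map can instead weight each branch differently at every pixel.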

Experimental results demonstrate that the proposed CNN model performs favorably against the state-of-the-art methods and verify the generalization of the constructed dataset for training CNNs. Some comparison results are presented in Fig. 4. In addition, this benchmark has been widely used as training and testing data in subsequent deep learning-based underwater image enhancement works [4], [5].

Fig. 4. Subjective comparisons on underwater images from the challenging set.

References:

[1] C. Li, J. Guo, R. Cong, Y. Pang, and B. Wang, “Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior,” in IEEE Transactions on Image Processing, vol. 25, no. 12, pp. 5664-5677, Dec. 2016, doi: https://dx.doi.org/10.1109/TIP.2016.2612882.

[2] C. Li, S. Anwar, and F. Porikli, “Underwater scene prior inspired deep underwater image and video enhancement,” in Pattern Recognition, vol. 98, 2020, doi: https://doi.org/10.1016/j.patcog.2019.107038.

[3] C. Ancuti, C. O. Ancuti, T. Haber, and P. Bekaert, “Enhancing underwater images and videos by fusion,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 2012, pp. 81-88, doi: http://dx.doi.org/10.1109/CVPR.2012.6247661.

[4] C. Li, S. Anwar, J. Hou, R. Cong, C. Guo, and W. Ren, “Underwater image enhancement via medium transmission-guided multi-color space embedding,” in IEEE Transactions on Image Processing, vol. 30, pp. 4985-5000, 2021, doi: http://dx.doi.org/10.1109/TIP.2021.3076367.

[5] C. Guo, R. Wu, X. Ji, L. Han, Z. Chai, W. Zhang, and C. Li, “Underwater ranker: Learn which is better and how to be better,” in AAAI Conference on Artificial Intelligence, 2023.

[6] C. Li et al., “An Underwater Image Enhancement Benchmark Dataset and Beyond,” in IEEE Transactions on Image Processing, vol. 29, pp. 4376-4389, 2020, doi: https://doi.org/10.1109/TIP.2019.2955241.