2021 IEEE VIP Cup at ICIP 2021: Privacy-Preserving In-Bed Human Pose Estimation

Motivation

Every person spends around one third of their life in bed. For an infant or a young toddler this percentage can be much higher, and for bed-bound patients it can reach 100% of their time. In-bed pose estimation is a critical step in many human behavioral monitoring systems, which focus on the prevention, prediction, and management of at-rest or sleep-related conditions in both adults and children. Automatic non-contact human pose estimation has received considerable attention and achieved notable success in the artificial intelligence (AI) community over the last few years, thanks to the introduction of deep learning. However, state-of-the-art vision-based algorithms in this field struggle under the challenges associated with in-bed human behavior monitoring, which include significant illumination changes (e.g. full darkness at night), heavy occlusion (e.g. the person being covered by a sheet or a blanket), and privacy concerns that impede the large-scale data collection needed for AI model training. These data quality challenges and privacy concerns have hindered the use of AI-based in-bed behavior monitoring systems at home, where, during the recent COVID-19 pandemic, such systems could have been an effective way to control the spread of the virus by avoiding in-person visits to clinics.

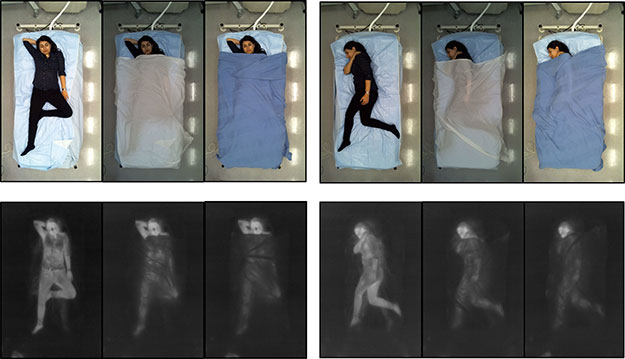

In recent work, Liu et al. (MICCAI’19) successfully estimated human poses under heavy occlusion and in total darkness using a non-RGB imaging modality (thermal data from long-wavelength infrared imaging, LWIR), by building a large-scale dataset of in-bed human poses called SLP. However, their results depended heavily on a training set containing a large number of fully annotated covered examples, which are very costly to collect and require frequent manual intervention for labeling. Moreover, the cover conditions captured in the SLP dataset cannot represent all possible occlusion cases in real-world settings such as hospital rooms or nursing homes, where the person could be under a different cover, have an overbed table across the bed, or be attached to a monitoring or treatment device. These factors make it infeasible to obtain ground-truth pose labels under such real-world application conditions.

In practice, a realistic way to handle these cases is to develop robust in-bed human pose estimation algorithms that have access only to labeled uncovered pose images and unlabeled occluded pose images, which are more practical to collect in a working environment.

Theoretically, transferring pose estimation from labeled uncovered cases to unlabeled covered ones can be regarded as a domain adaptation problem. Although multiple human pose estimation datasets exist, such as MPII, LSP, and COCO, they consist mainly of RGB images of daily activities, which exhibit a large domain shift from our target problem. Domain adaptation has been studied in machine learning for the last few decades, but mainstream algorithms focus mainly on classification rather than on regression tasks such as pose estimation. The 2021 VIP Cup challenge is in effect a domain adaptation problem for regression with a practical application, which has not been addressed before.

In this 2021 VIP Cup challenge, we seek computer-vision-based solutions for in-bed pose estimation under covers, where no annotations are available for covered cases during model training, while contestants have access to a large amount of labeled data for uncovered cases. Successful completion of this task will enable in-bed behavior monitoring technologies to work on novel subjects and in novel environments for which no prior training data is accessible.

Dataset Description

The Simultaneously-collected multimodal Lying Pose (SLP) dataset is an in-bed human pose dataset collected from 109 participants in two different settings (102 participants in a home setting and 7 participants in a hospital room). Participants were asked to lie in the bed and randomly change their poses within three main categories: supine, left side, and right side. For each category, 15 poses were collected. Overall, 13,770 pose samples for the home setting and 945 samples for the hospital setting were collected in each of the four imaging modalities: RGB, depth, LWIR, and pressure map. The cover condition was varied from uncovered, to cover one (a thin sheet approx. 1 mm thick), and then to cover two (a thick blanket approx. 3 mm thick). In this challenge, contestants are asked to estimate the human pose in covered conditions without access to any labeled covered examples during training. As LWIR imaging holds higher practical value for a low-cost in-bed pose monitoring solution, this challenge focuses on that modality.
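The sample counts quoted above follow directly from the collection protocol. The short check below is only a sanity calculation from the figures in this description; the constant and function names are illustrative and are not part of any SLP tooling.

```python
# Sanity check of the sample counts quoted in the dataset description.
# All names here are illustrative; only the numbers come from the text.
POSE_CATEGORIES = 3       # supine, left side, right side
POSES_PER_CATEGORY = 15   # poses collected per category
COVER_CONDITIONS = 3      # uncovered, thin sheet, thick blanket


def samples_per_modality(num_participants: int) -> int:
    """Pose samples per imaging modality for a given number of participants."""
    return (num_participants * POSE_CATEGORIES
            * POSES_PER_CATEGORY * COVER_CONDITIONS)


home_samples = samples_per_modality(102)
hospital_samples = samples_per_modality(7)
print(home_samples, hospital_samples)  # 13770 945
```

Each participant therefore contributes 135 samples per modality (45 poses under each of the three cover conditions).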

Instead of the whole SLP dataset, the partition and annotations for this challenge are as follows:

Train + Validation Datasets: (90 subjects; only the uncovered training data is annotated)

Training Set: (80 subjects)

- Annotated uncovered data from 30 subjects.

- Unannotated data with a thin cover from 25 subjects.

- Unannotated data with a thick cover from another 25 subjects.

Validation Set: (10 subjects)

- Unannotated data with a thin cover from 5 subjects.

- Unannotated data with a thick cover from another 5 subjects.

The corresponding LWIR and RGB data will be provided, together with the alignment mapping between RGB and LWIR.

Test Dataset: (12 novel subjects from home setting, 7 from hospital setting, unannotated)

- home setting: 6 thin cover, 6 thick cover.

- hospital setting: 3 thin cover, 4 thick cover.

- Only LWIR data will be provided for the test set, to simulate a practical overnight-monitoring application in which RGB is not available.

The test dataset is divided into two subsets: Test Set 1 and Test Set 2.

As the LWIR images are already human-centered, no bounding boxes are provided.
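For bookkeeping, the partition above can be written down as a small table of subject counts. The dictionary layout and key names below are purely illustrative (they are not a format supplied by the organizers), and the sketch assumes the listed subject groups are disjoint, as the wording "another 25" suggests.

```python
# Subject counts copied from the partition list above.
# The structure and key names are hypothetical, for illustration only.
SPLITS = {
    "train": {
        "uncovered_annotated": 30,
        "thin_cover_unannotated": 25,
        "thick_cover_unannotated": 25,
    },
    "validation": {
        "thin_cover_unannotated": 5,
        "thick_cover_unannotated": 5,
    },
    "test_home": {"thin_cover": 6, "thick_cover": 6},
    "test_hospital": {"thin_cover": 3, "thick_cover": 4},
}

# Total subjects per split (assumes the groups do not overlap).
totals = {name: sum(groups.values()) for name, groups in SPLITS.items()}
home_total = totals["train"] + totals["validation"] + totals["test_home"]

print(totals)
print(home_total)  # 102
```

Under that assumption, the home-setting splits (80 training, 10 validation, 12 test) account for all 102 home participants in SLP, and the 7 hospital participants appear only in the test set.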

Schedule (dates to be updated for this year)

Evaluation Criteria

Finalists will be selected according to their test result performance, novelty of their algorithm, the quality of the report, and the reproducibility of their code.

Our evaluation will rely on well-recognized metrics in the human pose estimation field, namely PCKh@0.5 and PCKh@0.2, together with PCKh-AUC, the area under the PCKh curve (calculated as the normalized area under PCKh for thresholds from 0 to 0.5). To focus on the challenging part, the evaluation will be conducted only over the covered cases.
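As a rough illustration of these metrics, the sketch below computes PCKh at a given threshold and a normalized PCKh-AUC in plain Python. It is not the organizers' evaluation code. It assumes that a head-segment length is supplied for each sample, that every joint is counted (no visibility mask), and that the AUC is approximated with a 50-step trapezoid rule; the function names `pckh` and `pckh_auc` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pckh(pred: Sequence[Sequence[Point]],
         gt: Sequence[Sequence[Point]],
         head_sizes: Sequence[float],
         alpha: float) -> float:
    """Fraction of joints whose error is at most alpha * head size."""
    correct = 0
    total = 0
    for pred_joints, gt_joints, head in zip(pred, gt, head_sizes):
        for (px, py), (gx, gy) in zip(pred_joints, gt_joints):
            if math.hypot(px - gx, py - gy) <= alpha * head:
                correct += 1
            total += 1
    return correct / total if total else 0.0


def pckh_auc(pred: Sequence[Sequence[Point]],
             gt: Sequence[Sequence[Point]],
             head_sizes: Sequence[float],
             max_alpha: float = 0.5,
             steps: int = 50) -> float:
    """Trapezoid-rule area under PCKh(alpha) on [0, max_alpha], divided by max_alpha."""
    alphas = [max_alpha * i / steps for i in range(steps + 1)]
    scores = [pckh(pred, gt, head_sizes, a) for a in alphas]
    area = sum((scores[i] + scores[i + 1]) / 2 * (alphas[i + 1] - alphas[i])
               for i in range(steps))
    return area / max_alpha


# Toy example: one sample, two joints, head size 10 pixels.
gt_poses = [[(0.0, 0.0), (10.0, 10.0)]]
pred_poses = [[(3.0, 0.0), (10.0, 16.0)]]  # joint errors: 3 and 6 pixels
heads = [10.0]

print(pckh(pred_poses, gt_poses, heads, 0.5))  # 0.5
print(pckh(pred_poses, gt_poses, heads, 0.2))  # 0.0
print(pckh_auc(pred_poses, gt_poses, heads))
```

Dividing the area by the threshold range keeps PCKh-AUC in [0, 1], so a model that localizes every joint exactly would score 1.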

Supported by:

- Northeastern University

- ICIP committee

Organizers

- Augmented Cognition Lab at Northeastern University.

- Sarah Ostadabbas (ostdabbas@ece.neu.edu)

- Shuangjun Liu (shuliu@ece.neu.edu)

- Xiaofei Huang (xhuang@ece.neu.edu)

- Nihang Fu (nihang@ece.neu.edu)