Networked Virtual Reality and Artificial Intelligence for Next Generation Low Vision Rehabilitation

Outline

Virtual reality (VR) holds tremendous potential to advance our society. It is expected to make an impact on quality of life, energy conservation, and the economy, and to reach a $162B market by 2022. As the Internet-of-Things (IoT) becomes a reality, technologists envision transferring remote contextual and environmental immersion experiences as part of an online VR session. Efficient and intelligent networked VR systems can have a profound impact on healthcare and medicine by enabling novel, previously inaccessible and unaffordable services to be delivered broadly and affordably. In this way, the overarching societal objectives of effectively connecting people, data, and systems, while enabling new technical healthcare infrastructure, can be achieved. This letter highlights an NIH-funded project that aims to accomplish these objectives through the synergistic integration of networked virtual reality and artificial intelligence.

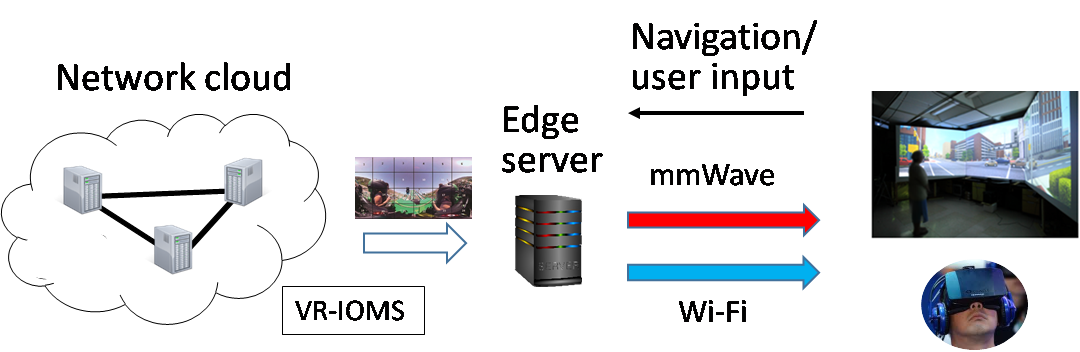

Figure 1. Ultra-Low Latency Network Delivery of 360° Virtual Reality Content and Intelligent Trainers for Automated Low-Vision Orientation and Mobility Rehabilitation

Low Vision and Orientation & Mobility: Background

In 2015, 1.7 million Americans ≥ 40 yrs had irreversible visual impairment (best-corrected acuity ≤ 20/40) and 0.68% were legally blind (acuity ≤ 20/200). These numbers are projected to double by 2050. In addition, 3.6% of children ≤ 18 yrs are unable to conduct major daily activities due to visual impairment. Visual impairment severely reduces a patient’s ability to carry out many activities of daily living and negatively impacts their physical and mental health, financial independence and quality of life. Because no effective treatments are available to reverse visual impairment, visual rehabilitation is the primary intervention. Effective visual rehabilitation restores lost activities through assistive devices and training, improves independence and elevates quality of life. 95% of the visually impaired have some usable vision (low vision, LV). LV individuals use vision as their primary sensory input, and LV rehabilitation focuses on maximizing the use of the remaining vision in daily activities.
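
As a small illustration of the acuity cut-offs quoted above, the following sketch classifies a best-corrected Snellen acuity against the 20/40 and 20/200 thresholds. The function names are hypothetical, the classification uses only the acuity criteria stated in this letter, and the logMAR conversion (the base-10 logarithm of the Snellen denominator divided by the numerator) is a standard clinical scale added here for reference rather than something the letter itself uses.

```python
import math


def classify_acuity(numerator: int, denominator: int) -> str:
    """Classify a Snellen acuity (e.g. 20/40) using only the cut-offs in the text.

    Hypothetical helper: it ignores every criterion other than acuity.
    """
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen terms must be positive")
    # Compare fractions by cross-multiplication to avoid floating-point error:
    # numerator/denominator <= 20/200  <=>  numerator * 200 <= 20 * denominator
    if numerator * 200 <= 20 * denominator:
        return "legally blind"
    if numerator * 40 <= 20 * denominator:
        return "visually impaired"
    return "above both thresholds"


def snellen_to_logmar(numerator: int, denominator: int) -> float:
    """Convert a Snellen fraction to logMAR (an addition, not from the letter)."""
    return math.log10(denominator / numerator)


if __name__ == "__main__":
    for num, den in [(20, 20), (20, 40), (20, 100), (20, 200), (20, 400)]:
        label = classify_acuity(num, den)
        print(f"{num}/{den}: {label}, logMAR = {snellen_to_logmar(num, den):.2f}")
```

Under these assumptions, 20/40 corresponds to a logMAR of about 0.30 and 20/200 to a logMAR of 1.0, so the two thresholds sit roughly 0.7 log units apart.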

LV rehabilitation is conducted by trained specialists, who teach LV individuals new skills to compensate for their impaired vision. Because this training occurs in real streets, buildings and homes, a specialist must accompany the LV student throughout the training to ensure safety. To restore total independence, many hours of one-on-one training are needed. These specialists are few in number compared to the large LV population. They tend to cluster in rehabilitation centers in large cities, and their services are usually non-reimbursable. Individuals with LV, meanwhile, have to rely on others to take them to rehabilitation sites, and they tend to have low income or be unemployed. Distance to rehabilitation sites, mobility and cost are known barriers that deprive a large portion of the LV population of the chance to regain independence. Solutions such as tele-rehabilitation in the form of video conferencing have been proposed to overcome the accessibility and affordability barriers to low vision rehabilitation. None has made a positive impact, because they all fail to address the issue of conducting safe, self-regulated skill training in locations convenient to an LV individual with minimal intervention from specialists.

The opportunity to develop accessible and affordable low vision rehabilitation has emerged with the recent rapid advances in virtual reality (VR), intelligent tutoring systems (ITS) and network delivery technologies. A VR-based intelligent low vision trainer equipped with sensors and real-time assessment can conduct automated low vision skill training customized to each individual’s visual impairment, functional vision and other personal traits in a safe, content-rich virtual environment. When such trainers are accessed from cloud servers and large-field-of-view, high-resolution VR content is delivered with ultra-low delay, LV trainees can receive quality skill training at locations and times convenient to them, at minimal cost.

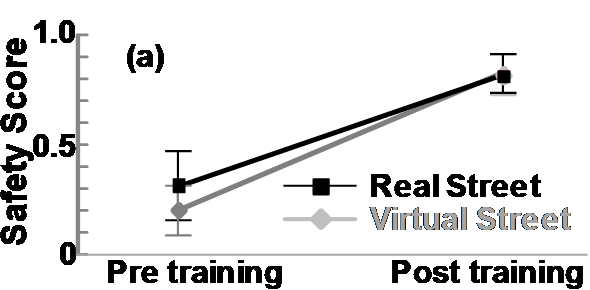

Figure 2. VR-trained O&M skills are transferable to the real world.

Any VR application has to show transferability, namely, that VR-acquired skills can be used to solve real-world problems. Our team provided evidence of transferability in a recent study of local offline training of orientation and mobility (O&M) skills in VR [1]. O&M is the ability to know one’s spatial location and to travel to a desired destination in an independent, safe and efficient manner. The skills to determine the safest time to start crossing a signalized street were taught in real and virtual streets to individuals whose vision was too poor to use traffic signals. The results (shown in Figure 2) clearly indicate that LV trainees could apply their VR-acquired skills to select the safest time to cross real streets, and that virtual street training was as effective as real street training.

Project Summary

As part of the NIH project, we are integrating virtual reality with ITS to create VR-based Intelligent O&M Specialists (VR-IOMS). These programs mimic human O&M specialists’ strategies and tactics in teaching a series of O&M skills. A clinical trial on LV individuals, in collaboration with the Alabama Institute for Deaf and Blind, will be conducted to compare the effectiveness of learning O&M skills from the intelligent specialists in virtual streets with that of learning from human O&M specialists in real streets. NJIT and UAB researchers are also developing new coding and streaming methods and algorithms to deliver high-fidelity, ultra-low latency VR content generated by cloud-based intelligent specialists to LV trainees over the Internet. The synergy of VR, ITS and network delivery will not only make LV rehabilitation accessible and affordable to all who may benefit from it, but also bring paradigm-shifting changes to other sensory, physical and neurological rehabilitation. A high-level illustration of the network system we are exploring to achieve our objectives is shown in Figure 1.

Lei Liu* and Jacob Chakareski°

*University of Alabama at Birmingham (UAB), °New Jersey Institute of Technology (NJIT)

References

[1] E. Bowman and L. Liu, “Individuals with severely impaired vision can learn useful orientation and mobility skills in virtual streets and can use them to improve real street safety”, PLoS One, 2017.